From Legacy to Leading-Edge: Navigating the Modernization Journey [Part 1]

Just a heads up: When I finished writing this post, it turned out to be quite long. So, I decided to split it into two parts, and this is the first one.

Introduction

Modernization can evoke mixed feelings among engineers. While some dread the term, others view it as a lifeboat. In the context of IT, modernization refers to the process of making older, or “legacy”, systems easier, more efficient, and more enjoyable to develop and maintain. As engineers (and AI) produce an ever-increasing amount of code, we inevitably face more code to extend and maintain.

It is essential to recognize that software tends to degrade over time, much like an aging vehicle. Just as cars develop wear and tear, software systems accumulate problems of their own: security vulnerabilities arise, performance lags as data volume or load grows, and technical debt hinders the development of new features. If software owners do not prioritize maintenance and support, these shortcomings accumulate until modernization becomes the only viable solution.

Modernization is a complex process that encompasses not only technical updates but also organizational changes. Given the breadth of this topic, this post focuses primarily on the technical aspects of modernization. For those interested in a more comprehensive view, I recommend the book “Architecture Modernization: Socio-technical Alignment of Software, Strategy, and Structure”, which covers both the technical and socio-technical areas of modernization. Here, I will share my experiences, challenges, and lessons learned while leading a modernization project with one of my company’s clients.

Context

One of our clients in the global travel retail sector is dedicated to improving the digital customer experience across their platforms, including point-of-sale systems and mobile applications that enable users to place orders while in-flight. They partnered with us to create a custom mobile application. Following a successful collaboration, they chose to expand our partnership with additional projects across various platforms.

I was brought on board as a software architect and manager to replace an in-house architect who was departing. With just a two-week transition period to gather knowledge from the outgoing architect, I assumed responsibility for leading the development, maintenance, and support of multiple custom systems, primarily within the API Gateway workstream. Reporting directly to senior leadership, I was tasked with several priorities:

- Establishing best engineering practices.

- Enhancing technical aspects of the systems.

- Balancing improvements of existing systems with the need to develop new functionalities to meet business demands.

And so, my work began.

Modernization Roadmap

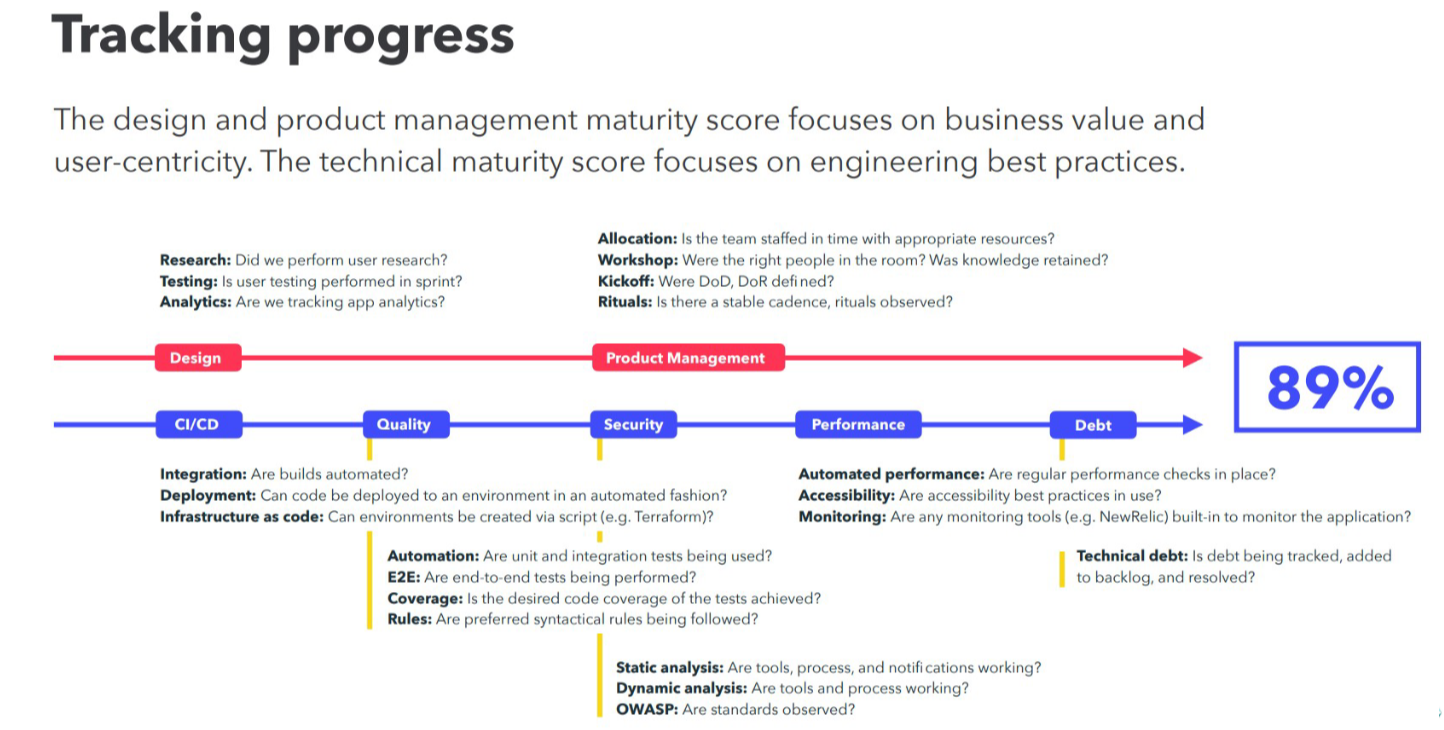

One of my primary goals was to improve the technical aspects of the systems. To accomplish this, I needed to evaluate what had to be improved to deliver more value to the client. Fortunately, I had a valuable tool at my disposal: the “Technical Delivery Maturity Framework”, which helps engineering teams assess the current state of their software against industry best practices.

Through this framework, I analyzed the system, identified gaps, and outlined a list of improvements. Additionally, I included two elements that are crucial for discussions with business stakeholders: estimated duration and potential business impact. Having concrete numbers facilitated conversations about budgets, timelines, and priorities. Once we had aligned with stakeholders and additional engineers had been onboarded to tackle the backlog, we embarked on our modernization journey and began addressing several challenges.

Challenge #1: Process

Right from the start of onboarding, I faced a significant challenge related to processes - or rather, the lack thereof. The first workstream I was introduced to was the API Gateway team, responsible for managing an AWS-based API Gateway solution that exposes a subset of internal APIs to external partners. Prior to my arrival, the situation resembled a bustling airport with no traffic control; two engineers handled both support and maintenance tasks while attempting to deliver new functionality without established procedures. Stakeholders would come directly to engineers, leading to prioritization based on the volume of incoming requests rather than strategic planning.

This chaotic scenario left timelines out of control and both stakeholders and engineers dissatisfied, much like travelers stranded at an airport without gate assignments. To address this, as we onboarded more engineers dedicated to modernization, we separated support and delivery tasks. Some engineers would primarily handle support while others focused solely on delivery. We then created distinct workflows for each group: Kanban and a ticketing system for the support team, and Scrum with sprint boards for the delivery team.

This division enabled the support team to target metrics like mean time to resolve issues, while the delivery team could refine and estimate tasks, track velocity, and plan work in sprints. Just as airport optimization leads to smoother travel experiences, our streamlined operations ensured that new functionality was developed systematically rather than rushed through support channels. This alignment increased satisfaction for both stakeholders and engineers, helping everyone work toward common goals within established timelines.

Challenge #2: Codebase Maturity

When we began examining the API Gateway codebase - a collection of AWS Lambda handlers - we were taken aback by its condition. It was like discovering an old car engine choked with dirt and rust. The JavaScript code was convoluted and hard to understand, debug, and modify. It also lacked types, so no build-time checks were in place to catch mistakes or make the code easier to trust. Since the handlers were designed for an AWS serverless environment, they were tightly coupled to AWS services, which made local development and testing impossible. Any change required developers to verify it manually in their own separate AWS environments.

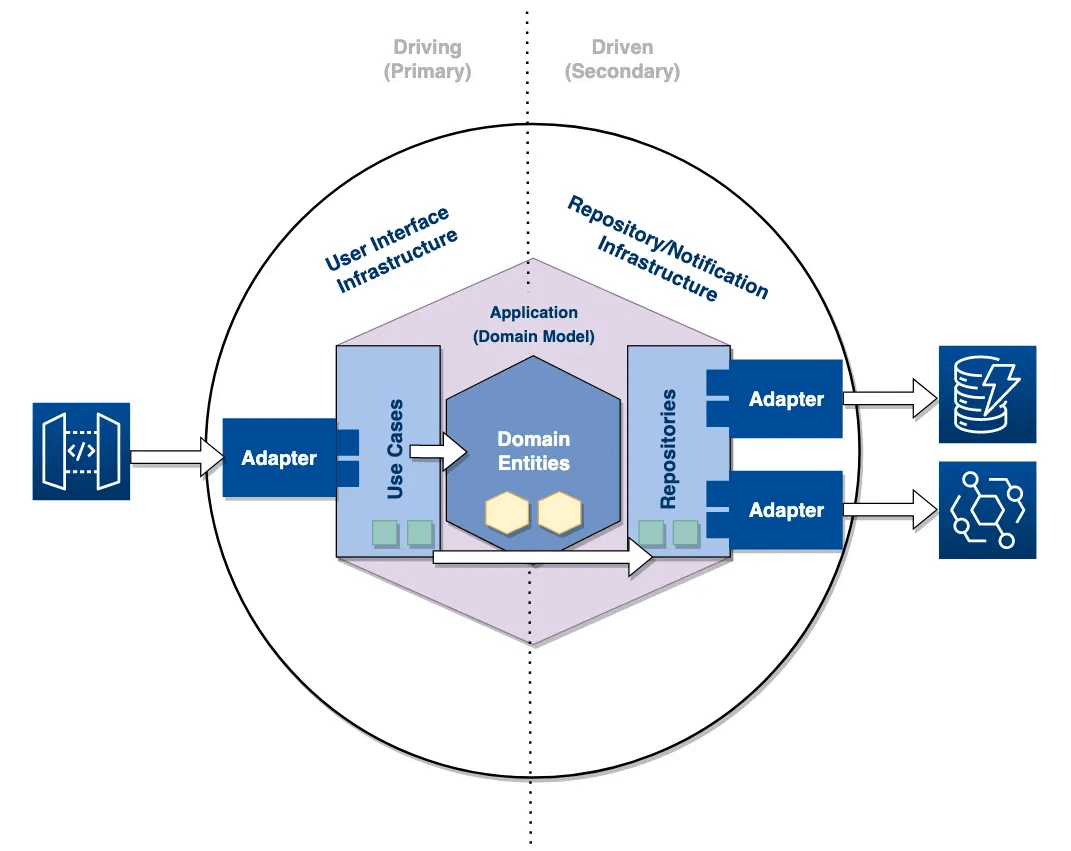

You might be wondering: were there any automated tests? As you guessed, there were none. Our team accepted the challenge and began modernizing the codebase. We first migrated it from JavaScript to TypeScript and enabled strict type checking, which can detect defects at build time. We also minimized the dependency on AWS by applying clean architecture principles: separating our domain logic from the infrastructure layer keeps the core code independent of any specific cloud service and leaves room to change the infrastructure later.
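To make the separation of domain logic and infrastructure more concrete, here is a minimal sketch of the idea. It is not our client's actual code: `Order`, `OrderRepository`, `lookUpOrder`, and `createHandler` are hypothetical names, and `HttpEvent` and `HttpResponse` are simplified stand-ins that I assume for the event and result shapes a Lambda handler behind API Gateway would receive and return.

```typescript
// Domain layer: plain TypeScript with no AWS imports.
// All names in this sketch are hypothetical.
interface Order {
  id: string;
  status: "pending" | "confirmed";
}

// Port: describes what the domain needs from storage, not how storage works.
interface OrderRepository {
  findById(id: string): Promise<Order | undefined>;
}

type OrderLookup =
  | { kind: "found"; order: Order }
  | { kind: "not_found" }
  | { kind: "invalid"; reason: string };

// Use case: depends only on the port, so it can run outside AWS.
async function lookUpOrder(repo: OrderRepository, orderId: string): Promise<OrderLookup> {
  if (orderId.trim() === "") {
    return { kind: "invalid", reason: "orderId is required" };
  }
  const order = await repo.findById(orderId);
  if (order === undefined) {
    return { kind: "not_found" };
  }
  return { kind: "found", order };
}

// Infrastructure layer: a thin adapter between an HTTP-style event and the use case.
// HttpEvent and HttpResponse are simplified, assumed shapes.
interface HttpEvent {
  pathParameters?: Record<string, string | undefined> | null;
}

interface HttpResponse {
  statusCode: number;
  body: string;
}

function createHandler(repo: OrderRepository): (event: HttpEvent) => Promise<HttpResponse> {
  return async (event) => {
    const result = await lookUpOrder(repo, event.pathParameters?.["orderId"] ?? "");
    switch (result.kind) {
      case "found":
        return { statusCode: 200, body: JSON.stringify(result.order) };
      case "not_found":
        return { statusCode: 404, body: JSON.stringify({ error: "order not found" }) };
      case "invalid":
        return { statusCode: 400, body: JSON.stringify({ error: result.reason }) };
    }
  };
}
```

With this shape, only `createHandler` knows about HTTP events. The union type `OrderLookup` also lets the compiler flag a handler that forgets to deal with one of the outcomes, which is the kind of build-time check plain JavaScript could not give us.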

To facilitate local development and testing, we established a local infrastructure layer, which cut the time needed to test a code change from 10 minutes to just 10 seconds. During the migration, we added automated unit and integration tests for all new code. Additionally, by creating an API project blueprint and common packages for shared features, we shortened the setup time for new projects from days to just hours.
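A local infrastructure layer can be as simple as an in-memory implementation of the same interface that the cloud-backed code implements. The sketch below shows that general shape under my own assumptions: `PartnerStore`, `InMemoryPartnerStore`, `createPartnerStore`, and `registerPartner` are hypothetical names, and the AWS-backed store is passed in as a factory so the example stays free of SDK calls.

```typescript
// Port shared by the cloud-backed and local implementations (hypothetical).
interface PartnerStore {
  get(partnerId: string): Promise<string | undefined>;
  put(partnerId: string, name: string): Promise<void>;
}

// Local infrastructure: keeps data in process memory, so no AWS account is needed.
class InMemoryPartnerStore implements PartnerStore {
  private readonly items = new Map<string, string>();

  async get(partnerId: string): Promise<string | undefined> {
    return this.items.get(partnerId);
  }

  async put(partnerId: string, name: string): Promise<void> {
    this.items.set(partnerId, name);
  }
}

type InfrastructureMode = "local" | "aws";

// Composition root: the only place that decides which implementation is used.
function createPartnerStore(
  mode: InfrastructureMode,
  createAwsStore: () => PartnerStore,
): PartnerStore {
  return mode === "local" ? new InMemoryPartnerStore() : createAwsStore();
}

// Domain logic under test: unaware of where the data actually lives.
async function registerPartner(
  store: PartnerStore,
  partnerId: string,
  name: string,
): Promise<"created" | "already_exists"> {
  if ((await store.get(partnerId)) !== undefined) {
    return "already_exists";
  }
  await store.put(partnerId, name);
  return "created";
}
```

Because `registerPartner` only sees the `PartnerStore` interface, a unit test can build the store in `"local"` mode and run entirely on a developer machine, while a deployed environment would pass `"aws"` and a real factory.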

Challenge #3: Documentation

During my two-week transition period, the departing architect spent most of their time writing documentation. Prior to this effort, there was hardly any documentation, and working with the systems was like trying to navigate a new city without a map. Some knowledge was recorded during those two weeks, but significant gaps remained. Testing approaches were undefined, source control rules were vague, and the release process ran on tribal knowledge. There were no standardized API development guidelines, which led to inconsistent naming conventions, parameter names, and path structures across APIs. The only viable solution was to define and document the missing information.

Throughout the modernization effort, we established multiple key documents:

- Testing Strategy

- Source Control Strategy

- Release Process and Cadence

- API Development Guidelines

- How-To Guides

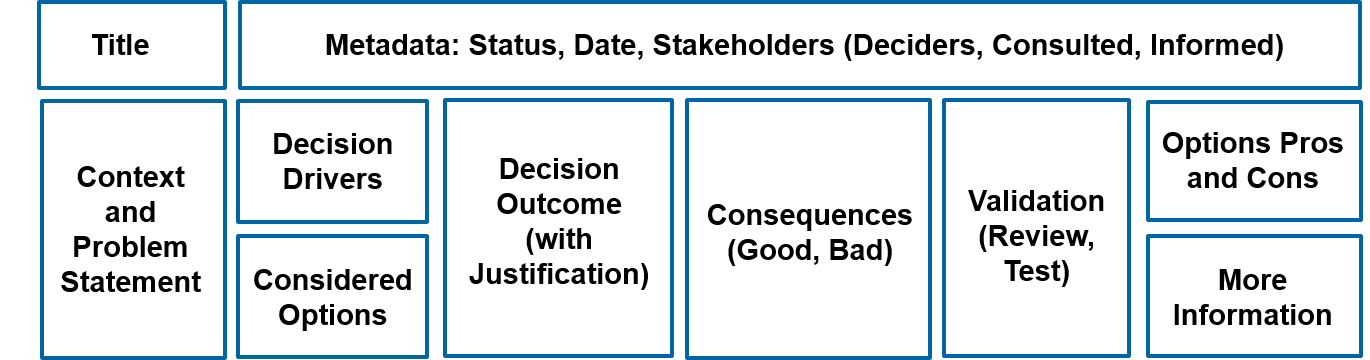

- Architecture Decision Records

- Technical Solution Documents

Architecture Decision Records proved particularly valuable for documenting significant technical choices. They not only served engineers who needed to reference past decisions but also gave the client a clear understanding of why and how choices were made during the project’s delivery.

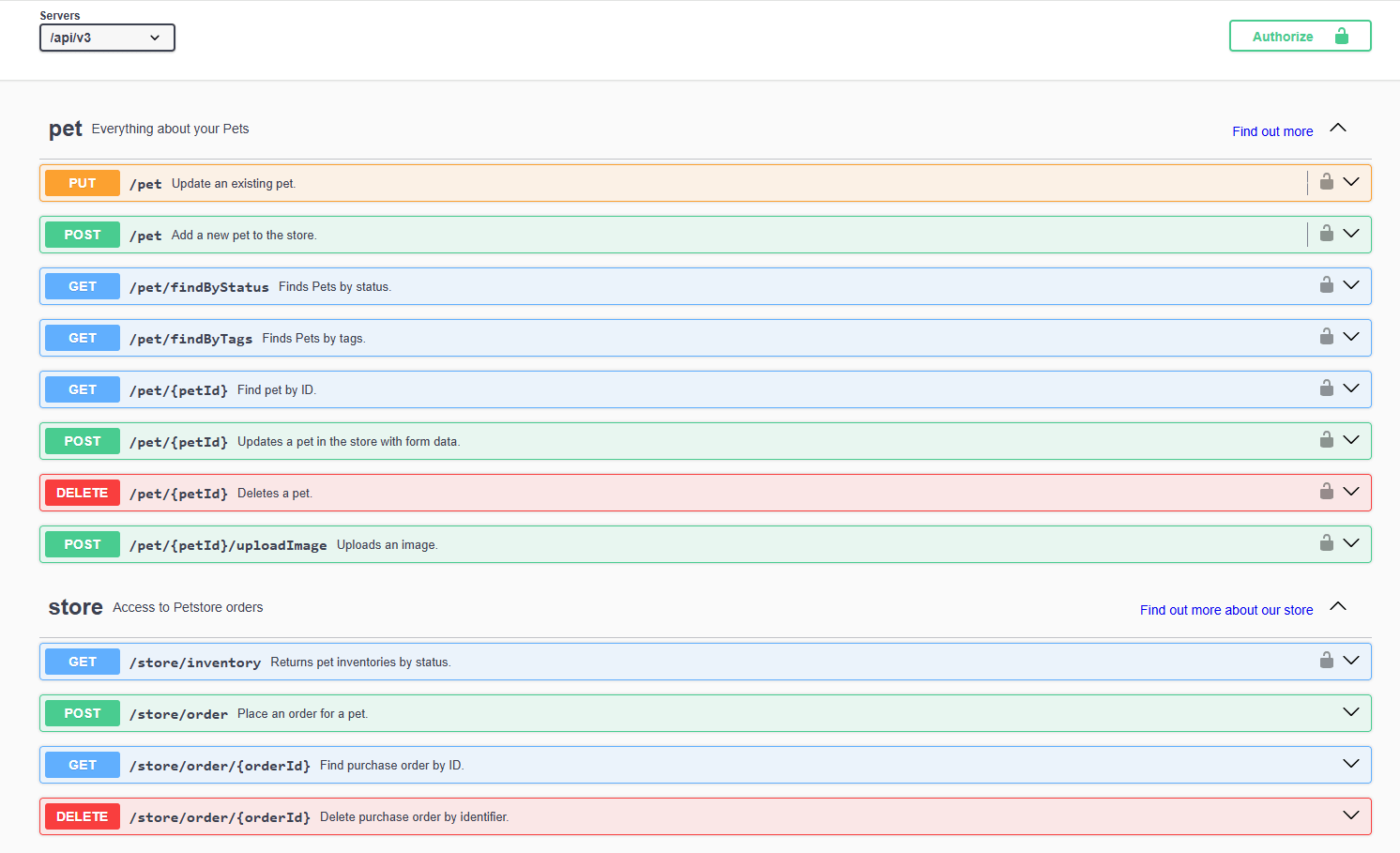

Furthermore, because the API Gateway team provided APIs to external partners, effective API documentation was crucial, much like a comprehensive guidebook for a traveler. Previously, API documentation was maintained as OpenAPI documents and distributed to partners manually. Whenever an API changed or a new one was added, the documents had to be updated by hand and sent to each partner via email - a tedious process.

We automated this by creating a dedicated developer portal that generates OpenAPI documentation automatically from TypeScript types and annotations. The generated documentation is uploaded to static file storage, from which the developer portal renders it as HTML pages. We also supplement the generated documentation with Markdown documents for formatted text and images. Each partner is assigned one or more users who can authenticate and access the latest documentation at any time, which eliminates the need for manual updates.
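To give a feel for the idea, here is a minimal sketch of turning annotated route definitions into an OpenAPI document. It is not the portal's actual generator. TypeScript types are erased at runtime, so real tools rely on decorators, compile-time analysis, or schema objects; this sketch assumes plain route descriptors instead. `RouteDefinition`, `pathParameterNames`, and `buildOpenApiDocument` are hypothetical names, and every path parameter is assumed to be a string.

```typescript
type HttpMethod = "get" | "post" | "put" | "delete";

// Hypothetical annotation attached to each route in the codebase.
interface RouteDefinition {
  method: HttpMethod;
  path: string; // OpenAPI-style template, e.g. "/orders/{orderId}"
  summary: string;
  responses: Record<string, string>; // status code -> description
}

interface OpenApiDocument {
  openapi: string;
  info: { title: string; version: string };
  paths: Record<string, Record<string, unknown>>;
}

// Extracts "orderId" from a segment such as "{orderId}".
function pathParameterNames(path: string): string[] {
  return path
    .split("/")
    .filter((segment) => segment.startsWith("{") && segment.endsWith("}"))
    .map((segment) => segment.slice(1, -1));
}

function buildOpenApiDocument(
  title: string,
  version: string,
  routes: RouteDefinition[],
): OpenApiDocument {
  const paths: OpenApiDocument["paths"] = {};
  for (const route of routes) {
    const responses: Record<string, { description: string }> = {};
    for (const status of Object.keys(route.responses)) {
      responses[status] = { description: route.responses[status] };
    }
    const operation = {
      summary: route.summary,
      // Assumption: all path parameters are required strings.
      parameters: pathParameterNames(route.path).map((name) => ({
        name,
        in: "path",
        required: true,
        schema: { type: "string" },
      })),
      responses,
    };
    // Merge operations that share a path but differ by HTTP method.
    paths[route.path] = { ...paths[route.path], [route.method]: operation };
  }
  return { openapi: "3.0.3", info: { title, version }, paths };
}
```

Serializing the result with `JSON.stringify` yields a file that can be uploaded to static storage and rendered by a documentation viewer, so the published documentation always comes from the same definitions the code uses.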

Stay Tuned

That’s it for this post! Keep an eye out for part two.