Automating security with DevSecOps

What is DevSecOps and why do we need it?

Adoption of DevOps practices enables rapid software development through more efficient collaboration between development and operations teams. Prior to DevOps, those teams worked in silos, avoided communicating with each other, and did not take full ownership of the whole software development life cycle, instead “throwing things over the wall”.

Over the years, people understood that this inefficiency not only hurts the well-being of employees but also has a significant impact on the business and the value it creates. As a result, the DevOps practice emerged. DevOps is a mindset, one that eliminates walls between development and operations so software can be built, tested, and deployed faster without manual steps. Instead of functioning as separate departments, developers, QA testers, system admins, support staff, and end users work together as a unified team, sharing responsibility for the final product. DevOps is the union of people, process, and technology that enables organizations to develop and release software faster and provide more value to their clients.

Traditionally, development and deployment are the highlighted activities in the software development life cycle. Correspondingly, those activities are reflected in the DevOps name: development (Dev) and operations (Ops). However, software security has always been important and is getting more and more attention as severe security breaches cost companies millions.

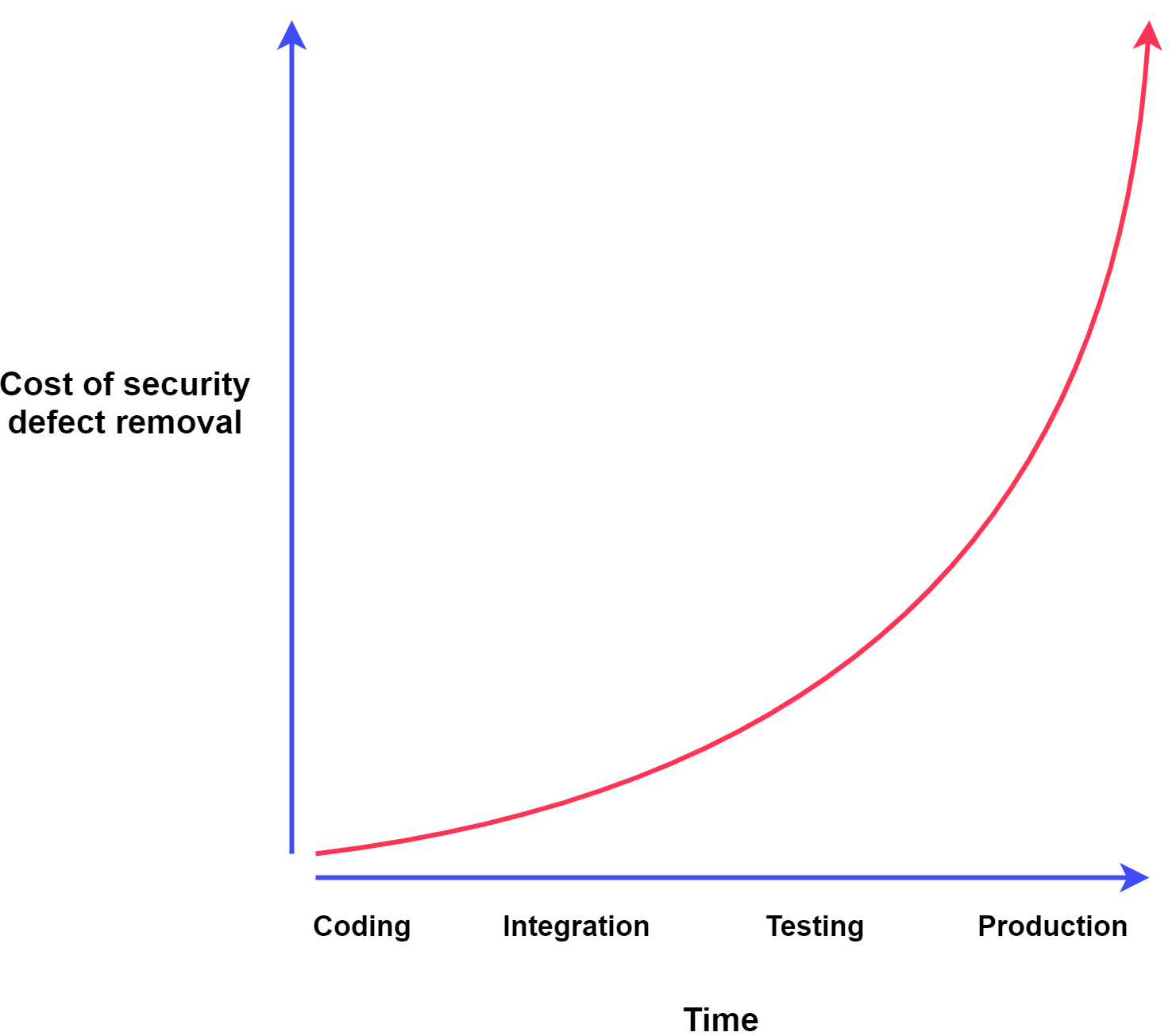

In the traditional software development life cycle, security was handled at the end of the cycle: penetration tests and configuration checks were conducted before each release. With DevOps practices applied, the security team cannot keep up with the rapid pace of a highly efficient DevOps team that can deploy several releases a day. Security testing thus becomes a bottleneck in the software development life cycle, and companies typically either accept this bottleneck or dedicate less time and effort to security testing in order to release more frequently.

The security team used to be the last step in software development. Typically, it would be assigned to test a new version of the application or some infrastructure changes to ensure that they are secure and compliant. Development and operations teams tend to “throw changes over the wall” at the last minute before the deadline. As a result, there is not much time to perform security testing, and the security team is held accountable if the deadline is missed. Consequently, the security team feels pressure to balance the level of security against the imposed time constraints, which negatively impacts the well-being of its personnel.

However, there is another choice: to adopt the DevSecOps practice. DevSecOps shifts security left and enhances the traditional software development life cycle and security as a whole by integrating security at every phase of the life cycle, from initial design through implementation and testing to deployment.

The DevSecOps model brings together people (development, security and operations), process and technology to ensure that:

- The organization focuses on providing secure software and services.

- The organization saves cost and time by detecting and fixing security issues as early as possible in the life cycle.

- Teams collaborate efficiently and have a mutual understanding of how to ensure that the produced software is secure.

- The practice of “throwing things over the wall” is not tolerated and every team member is responsible for the whole software development life cycle.

- Automation is in place to prevent human error and to reduce cost as well as the number of mundane tasks.

How to do DevSecOps

Once we understand what DevSecOps is and what advantages it brings to the organization, we need to consider how to implement this practice.

DevSecOps stands on two main pillars: automation and culture. Both are required to fully implement the practice and take advantage of its benefits.

The first pillar is automation. The DevOps practice takes advantage of powerful tooling to automate and speed up software delivery. The same principle can be applied to security activities. Tools enable teams to automate repetitive tasks and free them to take on more meaningful activities. For example, a security team can automate certain penetration tests and focus more on threat modeling.

One of the best-known DevOps practices is Infrastructure as Code. Similarly, DevSecOps introduces the Security as Code practice. This practice ensures that:

- Security controls are versioned, because they are stored within the version control system.

- There is a mutual acceptance of changes, because peer reviews can be conducted.

- Accountability is present, because it is clear who made each change and when.

- The risk of human error is reduced, because the process of applying security controls is automated.

- Consistency is ensured and configuration drift is eliminated, because the same code is applied to different environments.

- A higher level of confidence is achieved, because, like any code, it can be tested to verify its correctness.

The second pillar of DevSecOps is a culture shift. Tooling and automation alone are not sufficient to incorporate security efficiently into the software development life cycle. The way people cooperate and work together is key to ensuring a smooth process. In order to instil a DevSecOps culture, an organization has to:

- Encourage a security mindset among all individuals. All personnel (development, operations and security) are collectively responsible for ensuring security.

- Educate people. Security experts should conduct regular knowledge-sharing sessions, help to configure the tooling, and promote best practices.

- Encourage continuous feedback and improvement. Every individual has a say and can propose improvements.

- Foster communication between individuals. Open and timely communication is the key to overcoming issues and blockers.

An organization that deploys proper tooling and fosters this culture shift applies the DevSecOps practice successfully and takes advantage of its benefits.

Automation

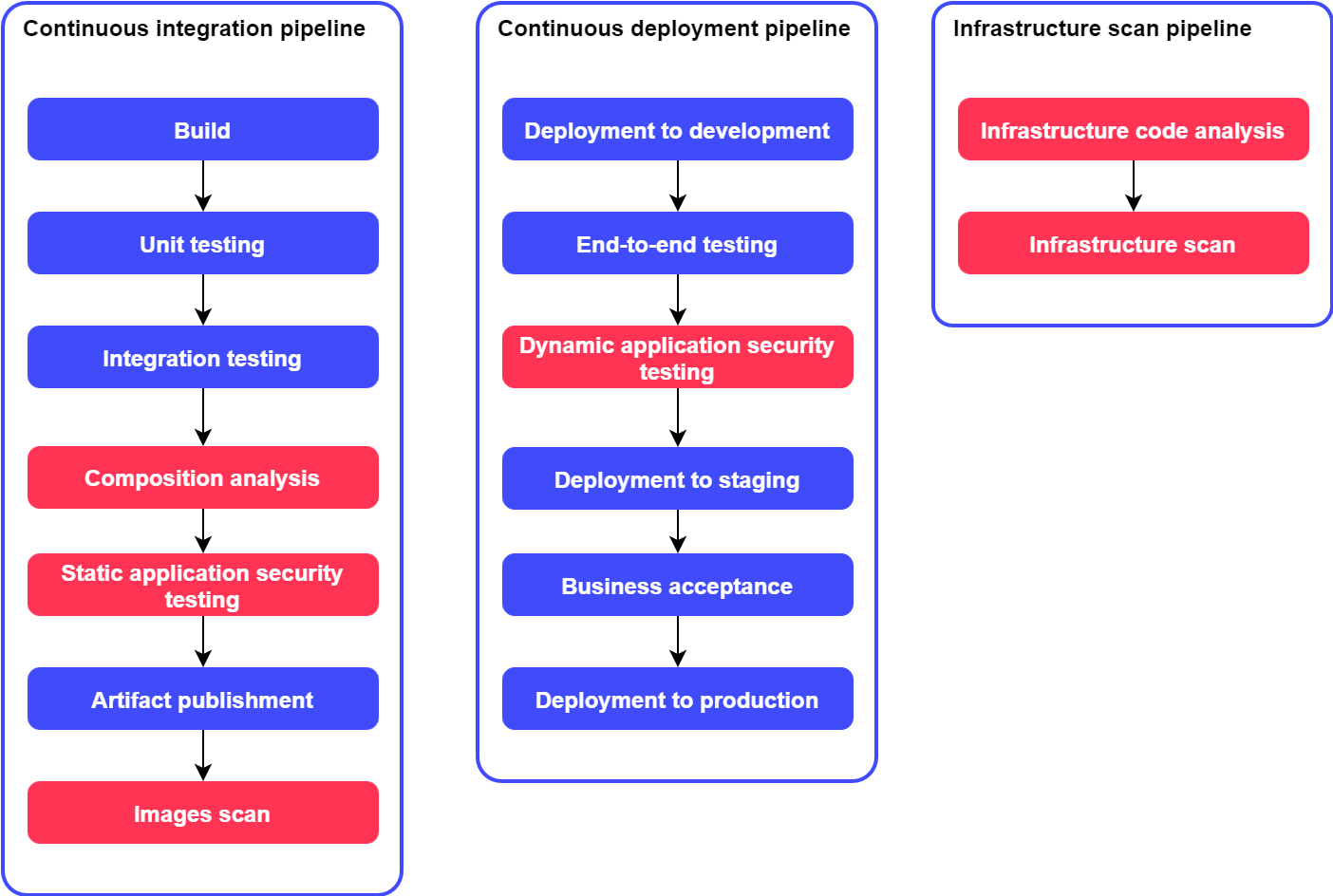

DevSecOps uses dedicated tooling to perform a variety of security checks. It enhances the traditional DevOps continuous integration and continuous deployment pipelines to ensure that the produced software has a minimal number of security vulnerabilities. The continuous integration pipeline is extended with the “Composition analysis”, “Static application security testing” and “Images scan” steps. The continuous deployment pipeline is extended with the “Dynamic application security testing” step.

Finally, DevSecOps introduces an additional infrastructure scan pipeline, which is used to scan the infrastructure in which the software runs. This pipeline contains the “Infrastructure code analysis” and “Infrastructure scan” steps.

It is important to emphasize that DevSecOps pipelines do not cover all the needed activities and tests in order to ensure that the produced software is secure. Other security related activities that are not covered in the pipelines, such as threat modeling and in-depth penetration testing, must also be completed on a regular basis.

Pre-commit hooks

A general practice in software development is to store all source code in a version control system, which acts as a central source of truth for a project. A version control system provides repositories that allow individuals to contribute changes to the source code and retrieve changes made by others. As data stored in repositories can be accessed by a number of people (or even everyone when repositories are public), no sensitive data should be stored there. Passwords, API keys and cryptographic secrets are types of data that must not be stored within the version control system.

There have been a number of publicly disclosed security breaches caused by leaked credentials found in GitHub repositories, as well as incidents in which leaked AWS keys were used to access protected resources.

It is relatively easy to accidentally commit sensitive data to the version control system. Removing committed data requires additional effort because version control systems track all changes - including the code that was removed.

The best way to protect against this type of data exposure is to prevent sensitive data from being committed in the first place. Version control systems provide hooks that run custom scripts when certain actions are executed. The pre-commit hook is the most useful one for this case. When changes are about to be committed, the pre-commit hook runs and can analyze what is being committed. If any sensitive data is about to be committed, the hook can reject the commit and warn the user that sensitive data must not be committed.
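The core of such a hook can be sketched in a few lines of Python. This is a minimal illustration, not how any particular tool works: the three patterns and the names `SECRET_PATTERNS`, `find_secrets` and `hook_exit_code` are assumptions made for this example, and real scanners ship far larger rule sets.

```python
import re

# Illustrative patterns only (assumptions for this sketch).
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key header": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "hard-coded password": re.compile(r"(?i)\bpassword\s*[:=]\s*['\"][^'\"]+['\"]"),
}


def find_secrets(staged_diff: str) -> list[tuple[int, str]]:
    """Return (diff line number, rule name) for every added line that looks sensitive."""
    findings = []
    for number, line in enumerate(staged_diff.splitlines(), start=1):
        # Only lines added by the commit matter; skip the "+++ b/file" header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((number, rule))
    return findings


def hook_exit_code(staged_diff: str) -> int:
    """Git aborts the commit when a pre-commit hook exits with a non-zero status."""
    return 1 if find_secrets(staged_diff) else 0
```

In a real Git hook, the diff text would typically come from `git diff --cached`, and the script would be installed as `.git/hooks/pre-commit` and exit with the value returned by `hook_exit_code`.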

Talisman is an example of a pre-commit or pre-push hook for Git repositories that validates outgoing changes for things that look suspicious, such as authorization tokens and private keys.

    $ git push
    Talisman Report:
    +-----------------+-------------------------------------------------------------------------------+
    | FILE            | ERRORS                                                                        |
    +-----------------+-------------------------------------------------------------------------------+
    | secret.pem      | The file name "secret.pem"                                                    |
    |                 | failed checks against the                                                     |
    |                 | pattern ^.+\.pem$                                                             |
    +-----------------+-------------------------------------------------------------------------------+
    | secret.pem      | Expected file to not to contain hex encoded texts such as:                    |
    |                 | awsSecretKey=someSensitiveSecret                                              |
    +-----------------+-------------------------------------------------------------------------------+

Existing repositories can be scanned for already committed secrets using the gitleaks tool.

Software composition analysis

When software is created, developers write only a small part of the source code that is executed at run-time, because a number of third-party libraries are used. Those libraries provide reusable functionality that developers do not have to implement over and over again. Composing software from reusable code speeds up development and allows teams to deliver business value faster.

However, like any code, those libraries can contain security vulnerabilities. When those vulnerabilities are exploited, the functionality of systems is disrupted, which can lead to security breaches. The usage of third-party libraries provides a lot of benefits, but also introduces additional security risks. Software developers rarely analyze which third-party libraries, and which versions of them, are used within the project. Once a library is introduced to the project, it is most often not reviewed again for newly disclosed vulnerabilities and available security improvements.

One of the most renowned examples of the use of vulnerable components is CVE-2017-5638, an Apache Struts 2 framework vulnerability that led to the Equifax security breach, which impacted 147 million consumers. In another case, the “Netmask” npm module was reported to have a server-side request forgery vulnerability. This library provides functionality to parse IPv4 CIDR blocks and is transitively used in over 278,000 other libraries, which became vulnerable as a result. Another type of risk arises when a malicious library with a similar or mistyped name is published and is accidentally introduced to the code-base.

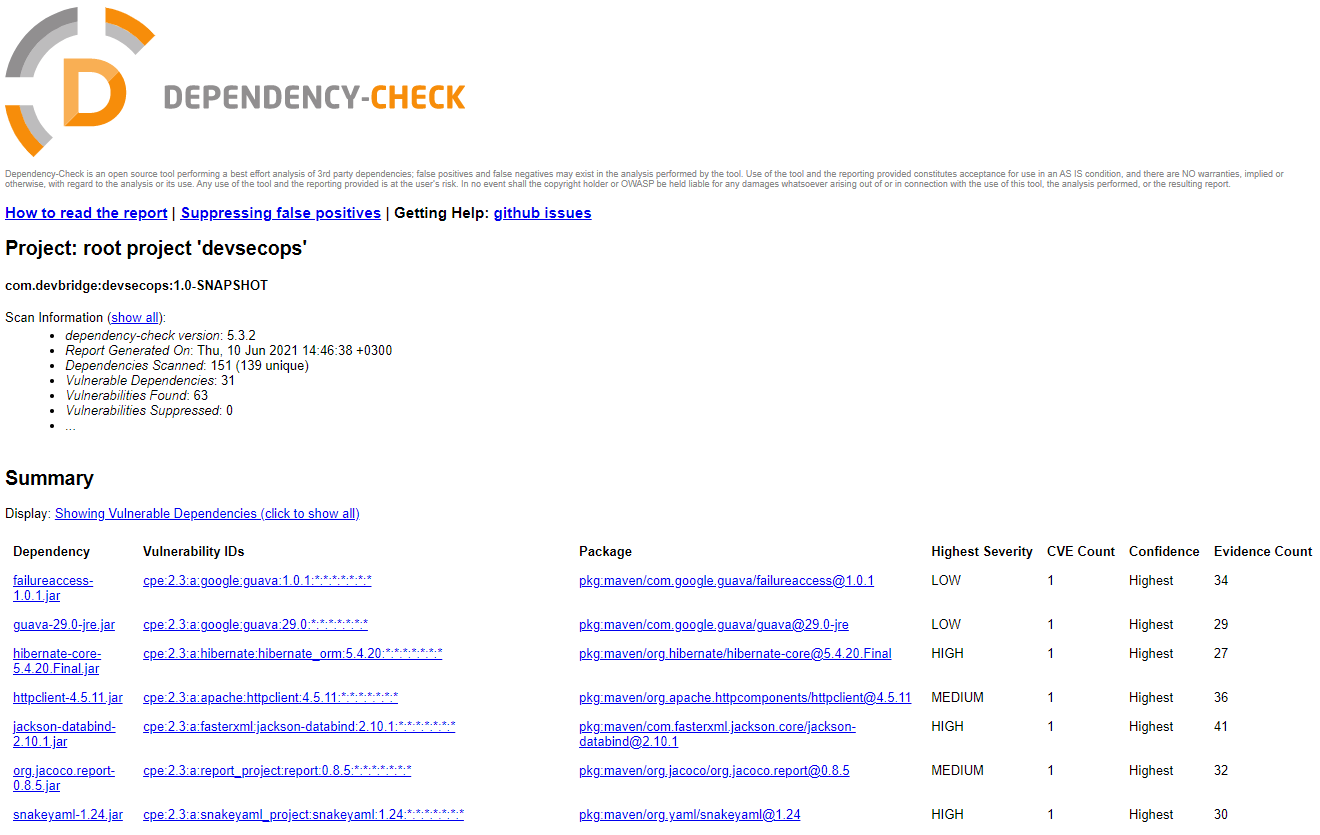

The risk of usage of third-party components is so important that it is included in the OWASP Top 10 Web Application Security Risks 2017. Security experts suggest that the most efficient way to mitigate this risk is to conduct continuous analysis of third-party libraries alongside their versions used in the code-base and check whether there are any publicly disclosed vulnerabilities for them.

Analysis of project dependencies should be done every time an application is built. If any high severity vulnerability is found within third-party libraries, the impact and remediation must be evaluated:

- If a vulnerable library has a security patch - a new patched version should be introduced.

- If a library is used for the first time - consider removing it from the code-base.

- If a vulnerable library does not have a security patch - evaluate the risk and apply other remediations to reduce it.
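The matching and triage logic described above can be sketched as follows. This is a simplified illustration under stated assumptions: the advisory format, the package names used with it and the functions `parse_version` and `audit` are hypothetical, and every version below `fixed_in` is treated as vulnerable, whereas real advisories describe precise affected ranges.

```python
def parse_version(text: str) -> tuple[int, ...]:
    """Turn '2.5.10' into (2, 5, 10) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def audit(dependencies: dict[str, str], advisories: list[dict]) -> list[dict]:
    """Match pinned dependency versions against (hypothetical) advisory records."""
    findings = []
    for advisory in advisories:
        installed = dependencies.get(advisory["package"])
        if installed is None:
            continue  # the project does not use this package
        fixed_in = advisory.get("fixed_in")  # None means no patch exists yet
        if fixed_in is None or parse_version(installed) < parse_version(fixed_in):
            findings.append({
                "package": advisory["package"],
                "installed": installed,
                "severity": advisory["severity"],
                "action": f"upgrade to {fixed_in}" if fixed_in else "assess risk and mitigate",
            })
    return findings
```

Running such a check on every build keeps the list of findings current even when the project's own code has not changed, because new advisories can appear for versions that were previously considered safe.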

OWASP provides the Dependency-Check tool, which attempts to detect publicly disclosed vulnerabilities contained within a project’s third-party libraries. It does this by determining whether there is a Common Platform Enumeration (CPE) identifier for a given dependency. If one is found, it generates a report linking to the associated CVE entries. The OWASP Dependency-Check tool primarily supports Java and .NET packaging tools. For the npm ecosystem, the npm command-line tool has built-in functionality to analyze dependencies: the “npm audit” command checks dependencies against publicly disclosed vulnerabilities.

There are also commercial tools for software composition analysis. One of them is Snyk. Snyk supports a variety of dependency management tools, can automatically create pull requests to update vulnerable versions, and provides a web interface with powerful reporting capabilities.

Static application security testing (SAST)

Static application security testing (SAST) is a testing methodology that analyzes source code to find security vulnerabilities. As SAST can analyze software implementation and all the different execution paths, this type of testing allows detection of the most prevalent vulnerabilities - buffer overflows, SQL injections, cross-site scripting and the usage of insecure cryptographic methods.

Static application security testing can be conducted manually during formal code reviews. However, relying only on human review is error-prone and inefficient. Dedicated tooling can assist with static application security testing: the most common and obvious issues can be detected by the tooling, so people can focus on more complex and composite issues. Vulnerabilities detected by the tools usually come with a severity level, an explanation of the issue, links to references and suggestions on how to fix it.

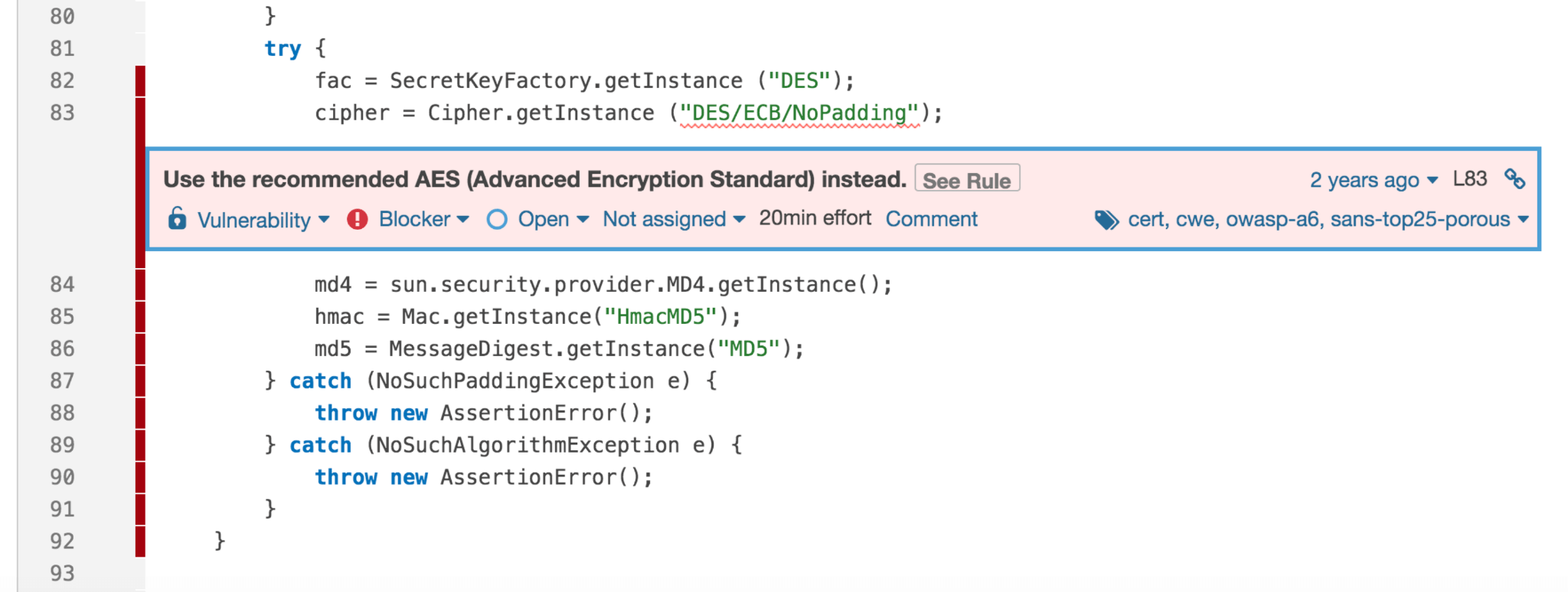

One of the most popular SAST tools is SonarQube. This tool can analyze Java, PHP, C#, C, C++, Python and JavaScript/TypeScript code for a number of security vulnerabilities. SonarQube reports not only security vulnerabilities but security hotspots as well. Security hotspots are uses of security-sensitive code. They might be valid, but human review and sign-off are required to close a hotspot.

SonarQube uses Quality Gates that are added to the continuous integration pipeline. A Quality Gate enforces a quality policy by answering one question: is the project ready for release? Quality Gates encompass general metrics such as code coverage and code duplication, as well as security-specific ones such as the number and severity of detected vulnerabilities. If the detected number or severity is above the Quality Gate threshold, the SAST step exits with an error code and the whole continuous integration pipeline fails as a consequence.
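The gate idea itself is simple enough to sketch in Python. The snippet below is not SonarQube's API or configuration format; the `GATE` thresholds, the metric names and the functions `evaluate_gate` and `exit_code` are assumptions chosen purely to illustrate how a set of conditions turns into a pass or fail signal for the pipeline.

```python
# Hypothetical gate definition; the values are assumptions for this example.
GATE = {
    "min_coverage_percent": 80.0,
    "max_duplication_percent": 3.0,
    "max_vulnerabilities": {"critical": 0, "high": 0, "medium": 5},
}


def evaluate_gate(metrics: dict, gate: dict = GATE) -> list[str]:
    """Return the list of failed conditions; an empty list means the gate passed."""
    failures = []
    if metrics["coverage_percent"] < gate["min_coverage_percent"]:
        failures.append("coverage below threshold")
    if metrics["duplication_percent"] > gate["max_duplication_percent"]:
        failures.append("duplication above threshold")
    for severity, allowed in gate["max_vulnerabilities"].items():
        found = metrics["vulnerabilities"].get(severity, 0)
        if found > allowed:
            failures.append(f"{found} {severity} vulnerabilities (allowed: {allowed})")
    return failures


def exit_code(metrics: dict) -> int:
    """A CI step signals failure to the pipeline through a non-zero exit code."""
    return 1 if evaluate_gate(metrics) else 0
```

Returning the list of failed conditions, rather than a bare boolean, lets the pipeline log explain why a build was rejected.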

Images scan

Containerization makes it possible to pack software artifacts and all the needed dependencies into a single standardized unit that can be run anywhere. The popularity of containers keeps increasing, because they allow smoother deployment: the container includes the whole runtime environment (application server, configuration, etc.). In order to create a container, an image that serves as a template is needed.

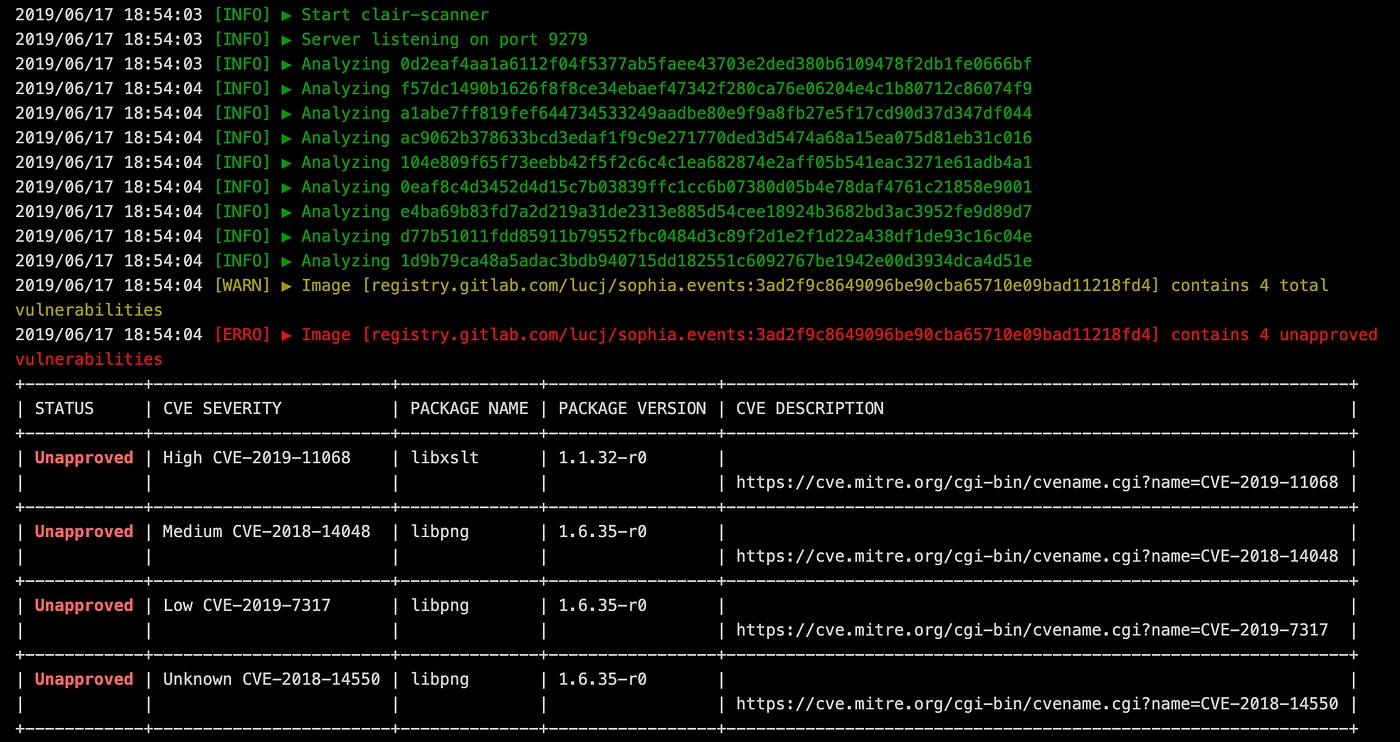

Like any software runtime environment, containers can contain vulnerabilities. For example, a base image that contains an application server can have publicly disclosed vulnerabilities (CVEs). Moreover, just as with third-party libraries, there is a risk of using potentially insecure non-official images that have similar or mistyped names. Software using vulnerable base images is therefore transitively vulnerable as well. As a result, there is a need to analyze images to detect any vulnerabilities within them. There are a number of tools and services to do that.

Docker is one of the most commonly used containerization platforms. It provides built-in Docker Scout functionality to scan locally built images or images pushed to Docker Hub.

If Amazon Elastic Container Registry is used to store images, then this service can also be used to scan them on push.

Finally, an open source tool called Clair provides the ability to scan OCI and Docker images against known vulnerabilities.

Dynamic application security testing (DAST)

Static application security testing can detect many security vulnerabilities, but it cannot detect run-time and environment-related issues. For that reason, dynamic application security testing is used to detect such issues. This type of testing is based on a black-box model, in which analysis is done against the working software without knowledge of the underlying implementation.

Dynamic application security testing is similar to penetration testing, because it mimics the hacker approach of attacking the working application in its execution environment. Consequently, this type of testing requires a solid understanding of how the application works and how it is used. Dynamic application security testing includes a variety of checks to verify that the software is resistant to various attacks, for example fuzzing HTTP request parameters for SQL injections and analyzing HTTP response headers for misconfiguration.
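The response header analysis mentioned above is the easier of the two checks to illustrate. The following Python sketch covers only that passive check, not fuzzing, and is not how any specific scanner is implemented. The header lists and the function `check_headers` are assumptions for this example, and the headers are passed in as a plain dictionary so the code runs without a network connection.

```python
# Protective headers whose absence scanners commonly report (illustrative list).
EXPECTED_HEADERS = (
    "Content-Security-Policy",
    "Strict-Transport-Security",
    "X-Content-Type-Options",
)
# Headers that disclose implementation details when present (illustrative list).
DISCLOSING_HEADERS = ("Server", "X-Powered-By")


def check_headers(headers: dict[str, str]) -> list[str]:
    """Report missing protective headers and headers that leak technology details."""
    # HTTP header names are case-insensitive, so compare in lower case.
    present = {name.lower(): value for name, value in headers.items()}
    findings = [
        f"missing {name}" for name in EXPECTED_HEADERS if name.lower() not in present
    ]
    findings += [
        f"{name} discloses '{present[name.lower()]}'"
        for name in DISCLOSING_HEADERS
        if name.lower() in present
    ]
    return findings
```

In practice the dictionary would be filled from the response of the running application, which is what makes this a dynamic rather than a static check.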

There are a number of tools for conducting dynamic application security testing; OWASP ZAP and Nikto are among the popular ones.

OWASP Zed Attack Proxy (ZAP) is a web application scanner and one of the most popular OWASP projects, actively maintained by an international team of volunteers. Penetration testers use ZAP as a proxy server, which allows them to manipulate all the traffic that passes through it. It also provides automated scan functionality: a URL of a web application is entered, and ZAP proceeds to crawl the web application with its spider and passively scan each page it finds. ZAP then uses the active scanner to attack all the discovered pages, functionality and parameters. ZAP analyzes HTTP responses to find vulnerabilities and misconfigurations, for example an insecure “Content Security Policy” or “Cross-Origin Resource Sharing” configuration. When the scan finishes, the tool provides a list of detected vulnerabilities alongside their severities and additional information.

Another tool used to conduct DAST is Nikto. It is a command-line based web server scanner. Similarly to OWASP ZAP, this tool accepts a web application URL and then starts the scan using different attack vectors - information disclosure, injections, command execution. Upon completion, Nikto provides a list of all detected vulnerabilities and additional information about them.

    nikto -host devsecops.local -port 8080 -Plugins "@@DEFAULT;-sitefiles"
    Nikto v2.1.6
    ---------------------------------------------------------------------------
    + Target IP: 127.0.0.1
    + Target Hostname: devsecops.local
    + Target Port: 8080
    ---------------------------------------------------------------------------
    + Start Time: 2020-12-05 18:13:20 (GMT2)
    ---------------------------------------------------------------------------
    + Server: nginx/1.17.10
    + X-XSS-Protection header has been set to disable XSS Protection. There is unlikely to be a good reason for this.
    + Uncommon header 'x-dns-prefetch-control' found, with contents: off
    + Expect-CT is not enforced, upon receiving an invalid Certificate Transparency Log, the connection will not be dropped.
    + No CGI Directories found (use '-C all' to force check all possible dirs)
    + The Content-Encoding header is set to "deflate" this may mean that the server is vulnerable to the BREACH attack.
    + Retrieved x-powered-by header: Express
    + /config/: Configuration information may be available remotely.
    + OSVDB-3092: /home/: This might be interesting...
    + 7583 requests: 0 error(s) and 7 item(s) reported on remote host
    + End Time: 2020-12-05 18:16:32 (GMT2) (192 seconds)
    ---------------------------------------------------------------------------
    + 1 host(s) tested

Infrastructure code analysis

One of the essential practices in DevOps is infrastructure as code. The goal of this practice is to store all the needed logic and scripts used to provision software run-time environments as code in the version control system. Storing infrastructure as code provides a number of benefits:

- Cost reduction - the same code can be used to swiftly provision a number of different environments.

- Consistency - environment drift is eliminated.

- Accountability - all the changes are tracked and must be reviewed/approved by peers.

Like any code, infrastructure code can be analyzed against different rules. Those rules can be as simple as naming conventions or more advanced ones that detect security issues. Such analysis can detect a number of vulnerabilities:

- Exposed server ports.

- Publicly accessible databases or files.

- Hard-coded secrets.
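A rule-based check for these three kinds of issues might look like the Python sketch below. It assumes the infrastructure code has already been parsed into plain dictionaries; that structure, the field names and the function `analyze` are hypothetical and do not correspond to the format of any particular infrastructure as code tool. The `var.` prefix stands in for a variable reference and is likewise an assumption.

```python
def analyze(resources: list[dict]) -> list[str]:
    """Apply three simple rules to already-parsed (hypothetical) resource definitions."""
    findings = []
    for resource in resources:
        name = resource["name"]
        # Rule 1: a port reachable from any address.
        for rule in resource.get("ingress", []):
            if "0.0.0.0/0" in rule.get("cidr_blocks", []):
                findings.append(f"{name}: port {rule['port']} is open to 0.0.0.0/0")
        # Rule 2: a database or file store exposed publicly.
        if resource.get("publicly_accessible") is True:
            findings.append(f"{name}: resource is publicly accessible")
        # Rule 3: a secret written as a literal instead of a variable reference.
        password = resource.get("password")
        if isinstance(password, str) and not password.startswith("var."):
            findings.append(f"{name}: password is hard-coded")
    return findings
```

Because the check works on the code alone, it can run before anything is provisioned, so findings surface during review rather than in a live environment.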

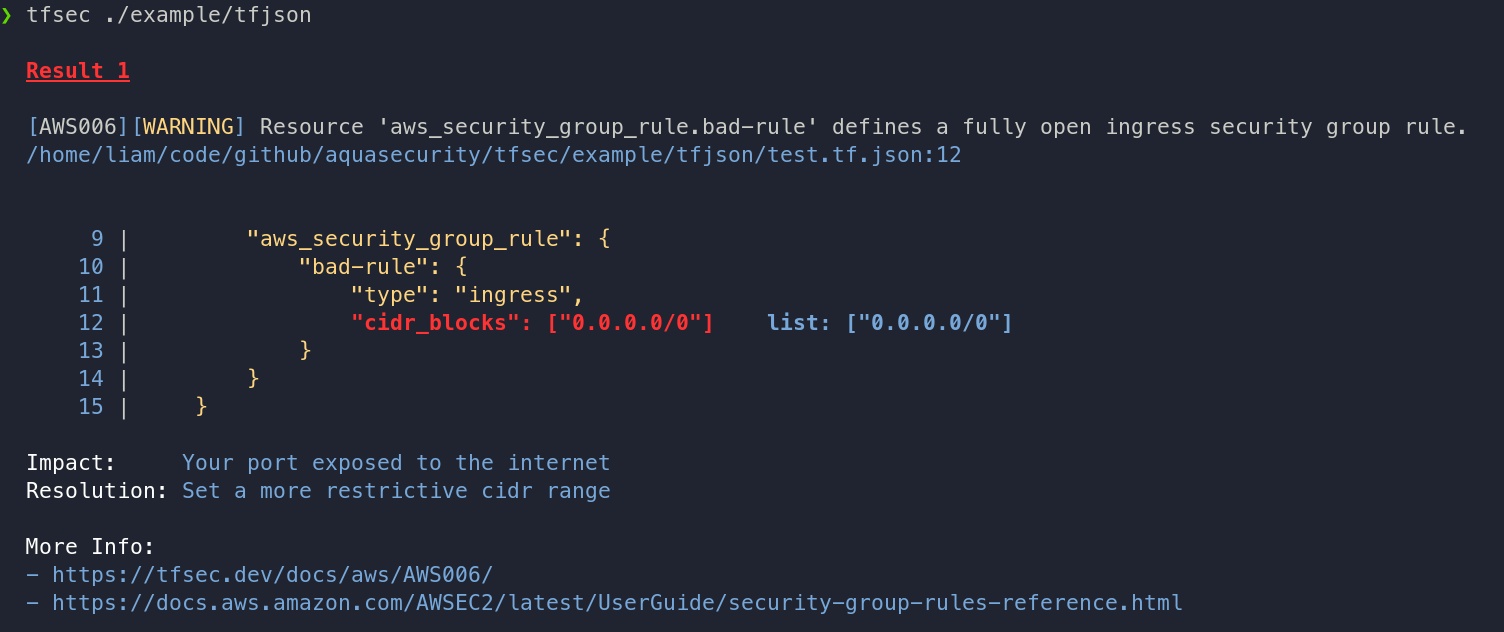

One of the most popular infrastructure as code tools is Terraform. Terraform provides the ability to declaratively specify which cloud resources are needed, along with their parameters and the relationships between them. Once the resources are described, the tool executes API requests to create, modify or destroy the specified resources within a number of supported cloud providers (e.g. AWS, Azure, GCP).

To ensure that Terraform code is secure and does not contain potential security issues, the tfsec tool can be used. This tool checks for sensitive data inclusion and for violations of best practice recommendations across all major cloud providers. For example, it can detect:

- AWS S3 buckets with public ACLs.

- Publicly accessible AWS RDS databases.

- Use of password authentication on Azure virtual machines.

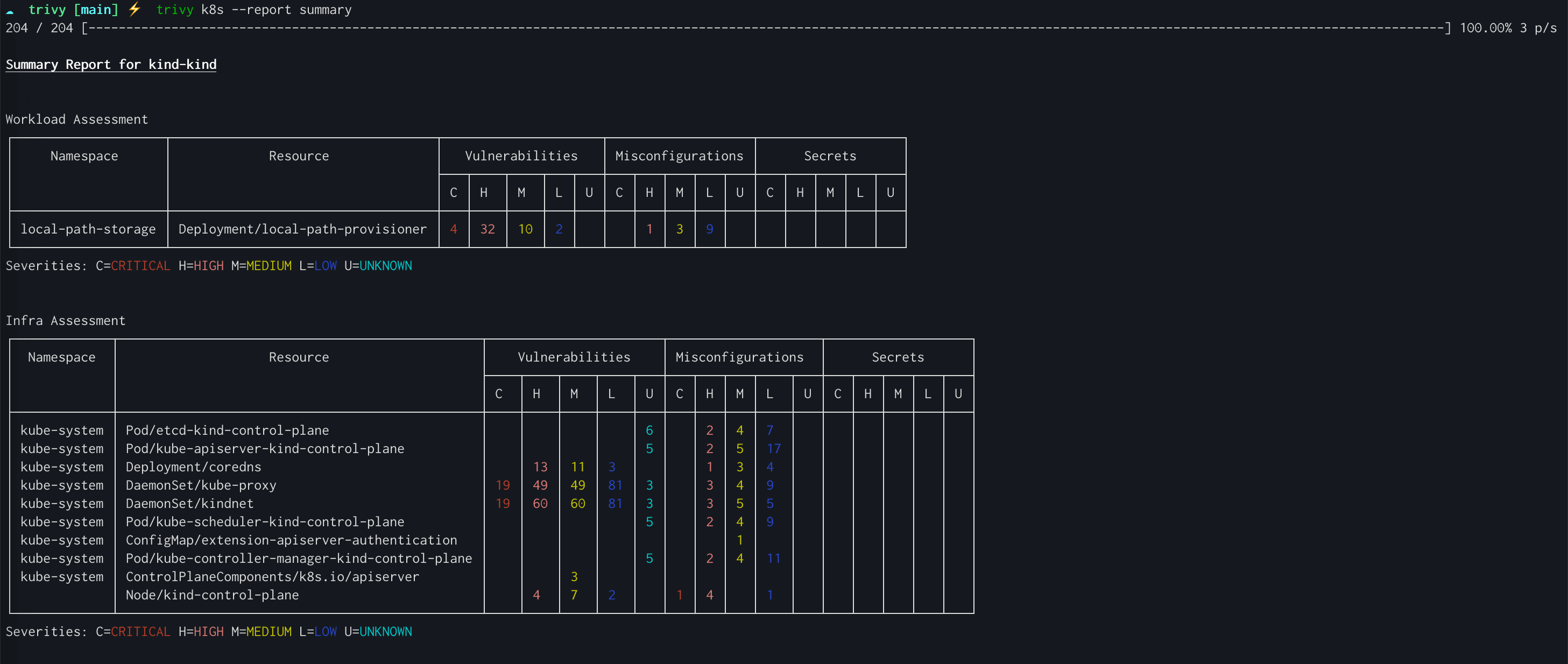

Another tool, Trivy, can detect vulnerabilities, misconfigurations and secrets, and produce SBOMs, for containers, Kubernetes, code repositories, clouds and more.

Infrastructure scan

Infrastructure as code analysis can be considered a static part of infrastructure testing because it analyzes only the code used to create the infrastructure and not the actual running infrastructure. As a result, there is a need to conduct dynamic infrastructure testing against the running infrastructure. For certain sectors, the requirements for infrastructure compliance and auditing are very high. The requirements can be internal (e.g. identified by the Chief Information Officer) or external (e.g. PCI DSS for financial organizations or HIPAA for health institutions). Typically, compliance testing and auditing are done manually and are time-consuming.

Like any security testing, compliance testing can be automated to make the process more robust and less time-consuming. A suite of automated infrastructure compliance and auditing tests can be run after changes are made to the infrastructure, on a regular schedule, or at an auditor’s request.

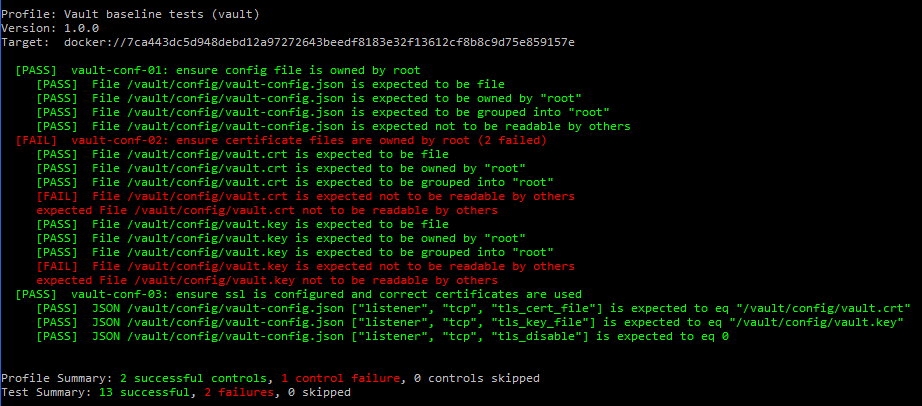

One of the most commonly used tools to conduct infrastructure testing is Chef InSpec. Chef InSpec is an open-source framework for testing and auditing applications and infrastructure. Chef InSpec works by comparing the actual state of the system with the desired state that is expressed in easy-to-read and easy-to-write Chef InSpec code.

Chef InSpec verifies the desired state by using SSH or WinRM to connect to remote machines or can integrate with different cloud providers to audit the resources deployed there. The tool can currently integrate with AWS, GCP and Azure cloud platforms. Once Chef InSpec completes the analysis, it provides a report with all detected violations.
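The comparison of desired and actual state can be illustrated with a short Python sketch. This is not Chef InSpec code (InSpec profiles are written in its own Ruby-based language); the `Control` class and the function `audit_state` are hypothetical, and the actual state is supplied as a dictionary instead of being read from a remote machine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Control:
    """One desired-state expectation: a setting and the value it must have."""
    control_id: str
    setting: str
    expected: str


def audit_state(controls: list[Control], actual_state: dict[str, str]) -> list[str]:
    """Compare the observed settings with the desired state and list violations."""
    violations = []
    for control in controls:
        observed = actual_state.get(control.setting, "<unset>")
        if observed != control.expected:
            violations.append(
                f"{control.control_id}: {control.setting} is {observed}, "
                f"expected {control.expected}"
            )
    return violations
```

For example, controls that require the SSH daemon options `PermitRootLogin` and `PasswordAuthentication` to be `no` would report a violation for a server where root login is still enabled.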

Chef InSpec supports manual creation of tests, which is useful when internal requirements are imposed and need to be automated. There is also Chef Supermarket, which contains a number of tests created by other people. Finally, there is the DevSec Hardening Framework, which provides sets of predefined controls, called profiles, for different applications and services. For example:

- MySQL and PostgreSQL databases - e.g. ensuring that the default passwords are changed.

- Apache and Nginx web servers - e.g. ensuring that workers run as non-privileged users.

- SSH and Docker services - e.g. ensuring correct file system permissions.

DevSec Hardening Framework provides profiles to detect violations as well as the resources for infrastructure as code tools to resolve them. The framework currently provides:

- Ansible Remediation Role

- Chef Remediation Cookbook

- Puppet Remediation Module

Vulnerability management

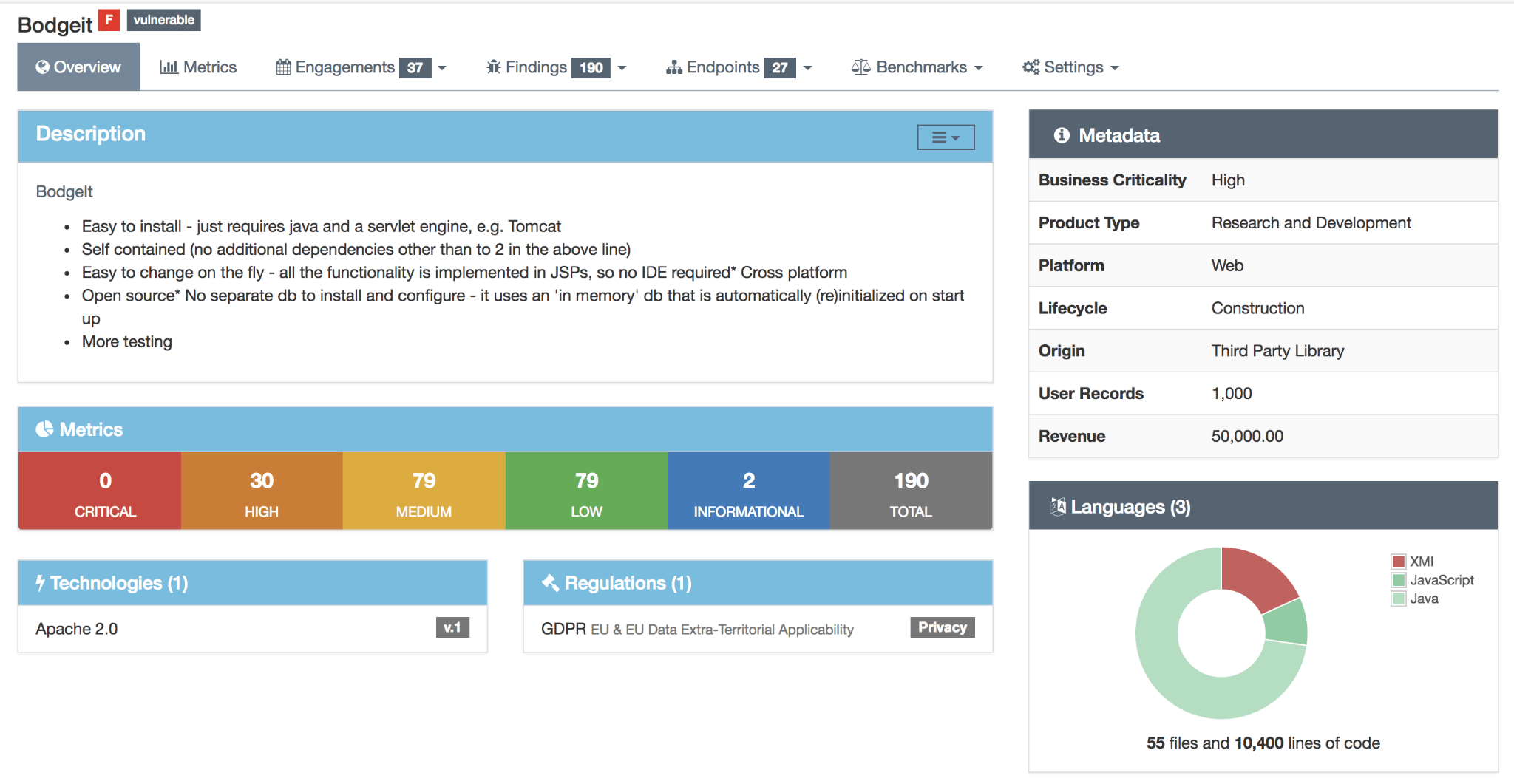

Vulnerability management is not a separate step in the DevSecOps pipeline but combines results from all the other steps. Tools report a number of different vulnerabilities and it is easy to lose track of them. Ideally, all reported vulnerabilities are stored in one centralized place. Having a single set of all detected vulnerabilities makes it possible to:

- Know the overall status of security - how many vulnerabilities are present.

- Prioritize the order in which vulnerabilities will be remediated.

- Track historical data and tendencies.
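The consolidation step can be sketched in Python as follows. The finding format (an `id`, a `component` and a `severity`), the tool names and the functions `consolidate` and `summary` are assumptions made for this illustration; real tools emit their own report formats that would first have to be converted into a common shape.

```python
from collections import Counter

SEVERITY_ORDER = {"critical": 0, "high": 1, "medium": 2, "low": 3}


def consolidate(reports: dict[str, list[dict]]) -> list[dict]:
    """Merge per-tool findings, collapsing duplicates reported by several tools."""
    merged = {}
    for tool, findings in reports.items():
        for finding in findings:
            key = (finding["id"], finding["component"])
            entry = merged.setdefault(key, {**finding, "reported_by": []})
            entry["reported_by"].append(tool)
    # Most severe first, so remediation can follow the list order.
    return sorted(
        merged.values(), key=lambda f: (SEVERITY_ORDER[f["severity"]], f["id"])
    )


def summary(findings: list[dict]) -> dict[str, int]:
    """Count findings per severity to show the overall security status."""
    return dict(Counter(f["severity"] for f in findings))
```

Storing the output of `summary` after every pipeline run would give the historical data needed to see whether the number of open vulnerabilities is trending up or down.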

One of the tools used to manage vulnerabilities is DefectDojo. DefectDojo makes it possible to manage an application security program, maintain product and application information, triage vulnerabilities and push findings into defect trackers (e.g. JIRA). It consolidates findings from different security tools (over 85 scanner formats are supported) into one source of truth.

There are other vulnerability management systems that can fulfil an organization’s needs:

- OpenVAS - a free software framework of several services and tools offering vulnerability scanning and vulnerability management.

- Nessus - a commercial tool to conduct in-depth perimeter scans, penetration tests, compliance checks and to manage identified vulnerabilities.

- Faraday - a commercial tool that integrates vulnerability data from over 80 security tools, provides custom reporting templates and has integration with ticketing systems (e.g. JIRA, ServiceNow).

Additional things to consider

When building the DevSecOps pipeline, certain aspects should be considered to ensure the quality of the pipeline:

- Tools used within the pipeline must have a command-line interface. Tools without a command-line interface are problematic to run on automation servers.

- Tools must provide machine-parsable output (e.g. XML, JSON). This is needed so that other tools (e.g. a vulnerability management system) can parse and use the results.

- There is a need to mark and resolve false positives and false negatives. There can be a number of invalid detections, so people should be able to review and reject such findings to ensure that only relevant information is reported.

- There is a need to have thresholds to specify when a specific pipeline step should fail. It is unrealistic to expect that the pipeline should fail when a single minor issue is reported. Organizations should take into consideration the requirements imposed on their domains and set realistic thresholds. For example, no more than 5 vulnerabilities in total and not a single critical severity vulnerability.

- Pipelines should not take too long. Aim to find an optimal set of tools and remove any redundancies.

- Pipelines should be parameterized in order to specify the needed level of detail in a particular scan.

- Pipelines can be optimized to skip certain steps. For example, software composition analysis can be conducted only when software dependencies are changed. However, a full non-optimized pipeline must run periodically as well.

- When choosing a tool, verify licensing limitations. Sometimes there could be restrictions on parallel scans or threads that could impact the cost at scale. Consider using a number of high-quality free and open source tools.
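The step-skipping optimization from the list above can be sketched in Python. The step names, the trigger files in `STEP_TRIGGERS` and the function `steps_to_run` are assumptions for this illustration; a real pipeline would express the same rule in the configuration language of its automation server.

```python
from pathlib import PurePosixPath

# Hypothetical mapping from pipeline step to the file names or suffixes that trigger it.
STEP_TRIGGERS = {
    "composition-analysis": {"package.json", "package-lock.json", "pom.xml", "requirements.txt"},
    "image-scan": {"Dockerfile"},
    "infrastructure-code-analysis": {".tf"},
}
ALWAYS_RUN = ["static-analysis"]


def steps_to_run(changed_files: list[str], full_run: bool = False) -> list[str]:
    """Select steps for this run; a scheduled full run ignores the optimization."""
    if full_run:
        return ALWAYS_RUN + list(STEP_TRIGGERS)
    selected = list(ALWAYS_RUN)
    for step, triggers in STEP_TRIGGERS.items():
        for path in map(PurePosixPath, changed_files):
            if path.name in triggers or path.suffix in triggers:
                selected.append(step)
                break  # one matching file is enough to schedule the step
    return selected
```

The `full_run` flag corresponds to the periodic non-optimized run: newly published advisories can affect dependencies that have not changed, so skipped steps still need to execute on a schedule.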

Conclusion

- DevSecOps shifts security left and enhances the traditional software development life cycle and security as a whole by integrating security at every phase of the life cycle.

- DevSecOps emphasizes the cultural change towards a shared responsibility for security and takes advantage of tooling to automate the process of security testing.

- DevSecOps enhances the traditional DevOps pipelines with security specific steps and introduces an additional infrastructure scan pipeline.

- Security related activities that are not included in the pipelines (e.g. threat modeling) must also be conducted separately on a regular basis.

- Introduction of pre-commit hooks reduces the risk of accidentally storing sensitive data in version control systems.

- Continuous analysis of the software composition reduces the risk of including security vulnerabilities via third-party libraries.

- Static application security testing allows the detection of the most common security issues by conducting code analysis.

- Dynamic application security testing mimics the hacker approach for attacking the running software in its execution environment.

- Analysis of infrastructure code allows the detection of infrastructure specific security issues before they even become present in the actual environment.

- Infrastructure testing ensures that the running infrastructure is configured properly and meets the imposed compliance requirements.

- Finally, all the reported security issues are present within the vulnerability management system and personnel can use that information to overview the current status of security, prioritize the remediation of the issues and track historical data.