Agentic AI Beyond the Hype: A Practical Perspective

Introduction

Ah, another AI blog post! With the flood of hype, ads, and grandiose announcements, it’s no wonder people are rolling their eyes and feeling skeptical. Research backs this up too, highlighting that a staggering number of AI initiatives are failing:

- MIT report: 95% of generative AI pilots at companies are failing

- Why 51% of Engineering Leaders Believe AI Is Impacting the Industry Negatively

When AI made a splash with the arrival of ChatGPT, I’ll admit I was a bit of a skeptic myself. Like a cautious cat peering into a box, I took my time to understand the nuts and bolts of AI before diving in headfirst. But understanding AI goes beyond just grappling with machine learning, neural networks, and large language models (LLMs). It’s also about seeing how AI can be integrated into critical areas like:

- Architecture

- Quality

- Security

- Cost

In this post, I’ll share my insights from examining these fields, particularly focusing on a hot new topic: agentic AI.

What Is Agentic AI?

Let’s begin with a solid definition of agentic AI. Major companies have their own takes on it and I like AWS’s definition:

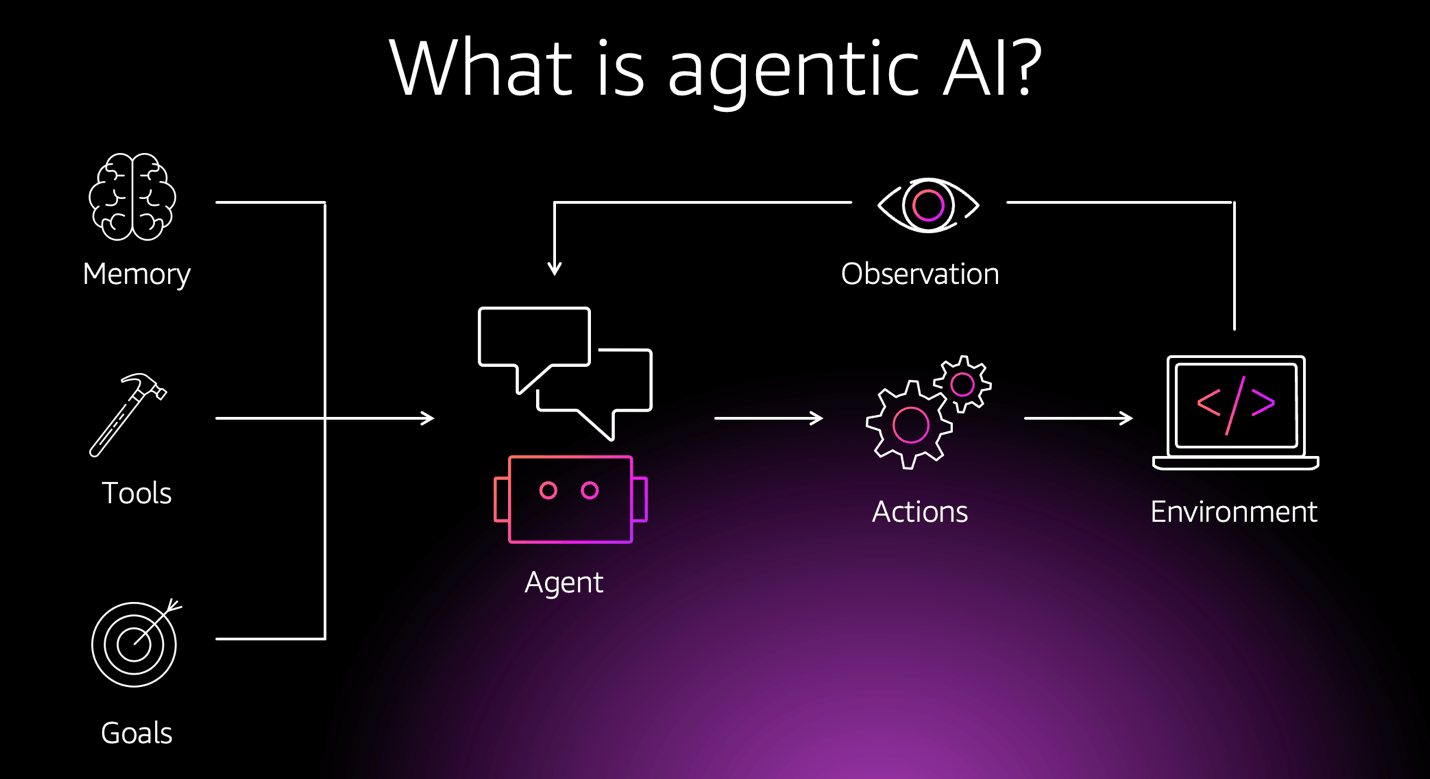

Agentic AI is an autonomous AI system that can act independently to achieve pre-determined goals. Traditional software follows pre-defined rules, and traditional artificial intelligence also requires prompting and step-by-step guidance. However, agentic AI is proactive and can perform complex tasks without constant human oversight. “Agentic” indicates agency — the ability of these systems to act independently, but in a goal-driven manner. AI agents can communicate with each other and other software systems to automate existing business processes. But beyond static automation, they make independent contextual decisions. They learn from their environment and adapt to changing conditions, enabling them to perform sophisticated workflows with accuracy. - AWS, 2025

Let’s break this down and compare it to traditional generative AI, which most of us are already familiar with.

- Autonomy: With generative AI, our workflow begins by opening a prompt window, typing in a request, submitting it, and retrieving a response - then iterating until we achieve the desired result, which we can use in various contexts. In contrast, agentic AI goes beyond this. An agentic system can execute workflows with minimal to no user interaction. These workflows can be triggered at scheduled times or by specific events. For instance, an agentic system can automatically conduct sentiment analysis as soon as an order comment is submitted. Think of it as the difference between driving a car yourself versus using a self-driving car that can navigate and make decisions independently based on traffic conditions.

- Action: While generative AI responds to prompts in a request-response loop, agentic AI can take actions like browsing the internet for the latest information or even placing online orders for you. It’s like having a personal assistant who not only fetches your coffee but also knows when you need it without being reminded.

- Communication: With generative AI, the interaction is typically a two-player game: you and a single LLM instance. Agentic AI, on the other hand, involves a vibrant network of multiple systems (AI and non-AI) communicating collaboratively to reach the goals set by users. It’s as though you’ve gone from playing chess solo to engaging in a whole team strategy game.

This shows us that agentic AI is the next step, offering more capabilities than generative AI. And those automated AI components? They’re called agents, and we’ll explore them next.

What Is an Agent?

Let’s define what an agent is (using AWS’s definition again, of course):

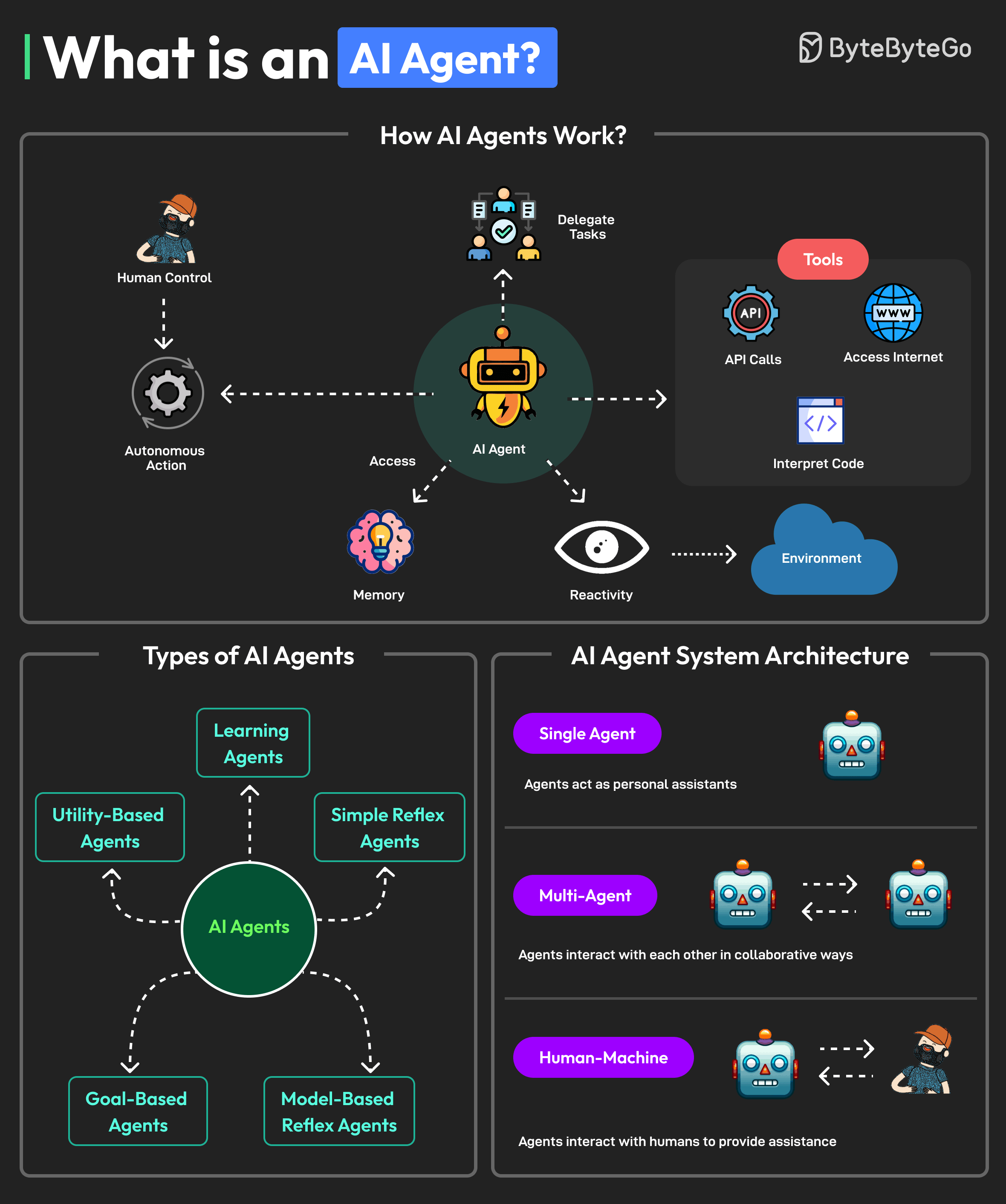

An artificial intelligence (AI) agent is a software program that can interact with its environment, collect data, and use that data to perform self-directed tasks that meet predetermined goals. Humans set goals, but an AI agent independently chooses the best actions it needs to perform to achieve those goals. For example, consider a contact center AI agent that wants to resolve customer queries. The agent will automatically ask the customer different questions, look up information in internal documents, and respond with a solution. Based on the customer responses, it determines if it can resolve the query itself or pass it on to a human. - AWS, 2025

An AI agent is essentially a self-sufficient software program that can interact with its environment and take action. It’s several levels more sophisticated than simply chatting with ChatGPT. Now, when multiple AI agents collaborate, we have (drum roll, please) – agentic AI!

Multiple AI agents can collaborate to automate complex workflows and can also be used in agentic AI systems. They exchange data with each other, allowing the entire system to work together to achieve common goals. Individual AI agents can be specialized to perform specific subtasks with accuracy. An orchestrator agent coordinates the activities of different specialist agents to complete larger, more complex tasks. - AWS, 2025

Now that we have a high-level grasp of what an agent is, let’s dive deeper into the key components that make up an agent:

- System Prompt: In the realm of prompt engineering, crafting a system prompt is crucial for steering an AI system’s behaviors. When building specialized AI agents, we want them to adopt particular roles and styles of communication. All this is encapsulated in the system prompt, where we prescribe specific instructions for our agent. I’ll provide some prompts in the examples section.

- Examples: Just like humans learn best from examples, so do AI agents. When no examples are given, it’s “zero-shot” prompting - meaning the agent must guess how to operate. Conversely, when we provide one or more examples, it’s “few-shot” prompting. With examples in its toolkit, the agent can combine them with the system prompt to deliver more accurate results and expected behavior.

- Tools: Using tools is a cornerstone of agent functionality. In generative AI, you ask a question and get answers based on the knowledge AI absorbed during training. With agentic AI, agents can retrieve resources or perform actions using tools. For instance, an agent can search Google for you (resource retrieval) or make an API call on your behalf (action using a tool).

- Communication Interface: Since an agent is a software system, it requires some entry point for interaction. These gateways are known as application programming interfaces (APIs). The industry is working towards standardizing these to facilitate seamless integration of agents within the digital ecosystem.

- Communication Protocols: Agentic systems involve multiple agents communicating with each other to reach a common goal. A standardized communication protocol is key for such seamless interaction.

- Human Interaction: While agents should be as autonomous as possible, some human interaction is still necessary. An agent might need to ask users clarifying questions or seek explicit approval for critical actions (I doubt you’d want to hand over your bank account details to an agent without a second thought). Thus, a dedicated interface for human input is essential to weave user contributions into workflows.
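
To make these components a bit more tangible, here is a minimal sketch of how a system prompt, few-shot examples, tools, and a human approval step could sit together in one object. Everything in it is an assumption made for illustration: `AgentSpec`, `build_messages`, `call_tool`, and the `place_order` tool are hypothetical names, and the chat-style message list is just one common convention, not a required format.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class AgentSpec:
    """Hypothetical container for the agent building blocks listed above."""
    system_prompt: str
    examples: List[Tuple[str, str]] = field(default_factory=list)  # (user, assistant) pairs
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)
    needs_approval: frozenset = frozenset()  # names of tools that require a human "yes"

    def build_messages(self, user_input: str) -> List[dict]:
        """Assemble system prompt, few-shot examples, and the actual request."""
        messages = [{"role": "system", "content": self.system_prompt}]
        for question, answer in self.examples:
            messages.append({"role": "user", "content": question})
            messages.append({"role": "assistant", "content": answer})
        messages.append({"role": "user", "content": user_input})
        return messages

    def call_tool(self, name: str, approve: Callable[[str], bool], **kwargs) -> str:
        """Run a tool, asking the human first when the tool is marked as critical."""
        if name in self.needs_approval and not approve(name):
            return f"{name}: rejected by user"
        return self.tools[name](**kwargs)


reviewer = AgentSpec(
    system_prompt="You are an experienced software architect.",
    examples=[("Review: 'We picked X.'", "Missing context and compared options.")],
    tools={"place_order": lambda item: f"ordered {item}"},
    needs_approval=frozenset({"place_order"}),
)
messages = reviewer.build_messages("Review this ADR.")
```

With an empty `examples` list the same call produces a zero-shot prompt; adding pairs turns it into few-shot prompting without touching the rest of the agent.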

Now that we’ve covered the fundamentals, we can delve into more technical details about agent implementation. As agents are software programs, we can apply engineering concepts and terminologies akin to those we use for building modern applications.

When designing systems made up of smaller independent components, we employ the term microservices. Similarly, an agentic system composed of smaller independent agents can be called (drum roll) – a microagent system.

Microagent

While architecting an agentic system, we could create one large agent, akin to traditional monolithic architectures. However, a smarter approach is to build several smaller microagents that can work together efficiently. Just as you wouldn’t have a single person managing an entire company, having a diverse set of specialists results in greater efficiency and improved outcomes.

While designing microagents, established engineering practices come into play.

Independent SDLC

Each agent must have its own independent Software Development Lifecycle (SDLC). This includes requirement gathering, design, implementation, testing, deployment, and operations. Just as with microservices, each microagent can live its own life, maturing from planning to maintenance.

For example, some simple agents can be developed and released quickly, while more complex agents may need several iterations before they are ready for deployment. Additionally, some agents may be used infrequently and generate less load, whereas others might be heavily utilized, creating significant demand. Since each agent can scale horizontally on its own, optimal operating costs can be maintained.

The topic of the software development lifecycle is extensive, so I won’t dive into all the details here. Instead, think of it as an exercise to explore how these lifecycle phases would function for an agent system.

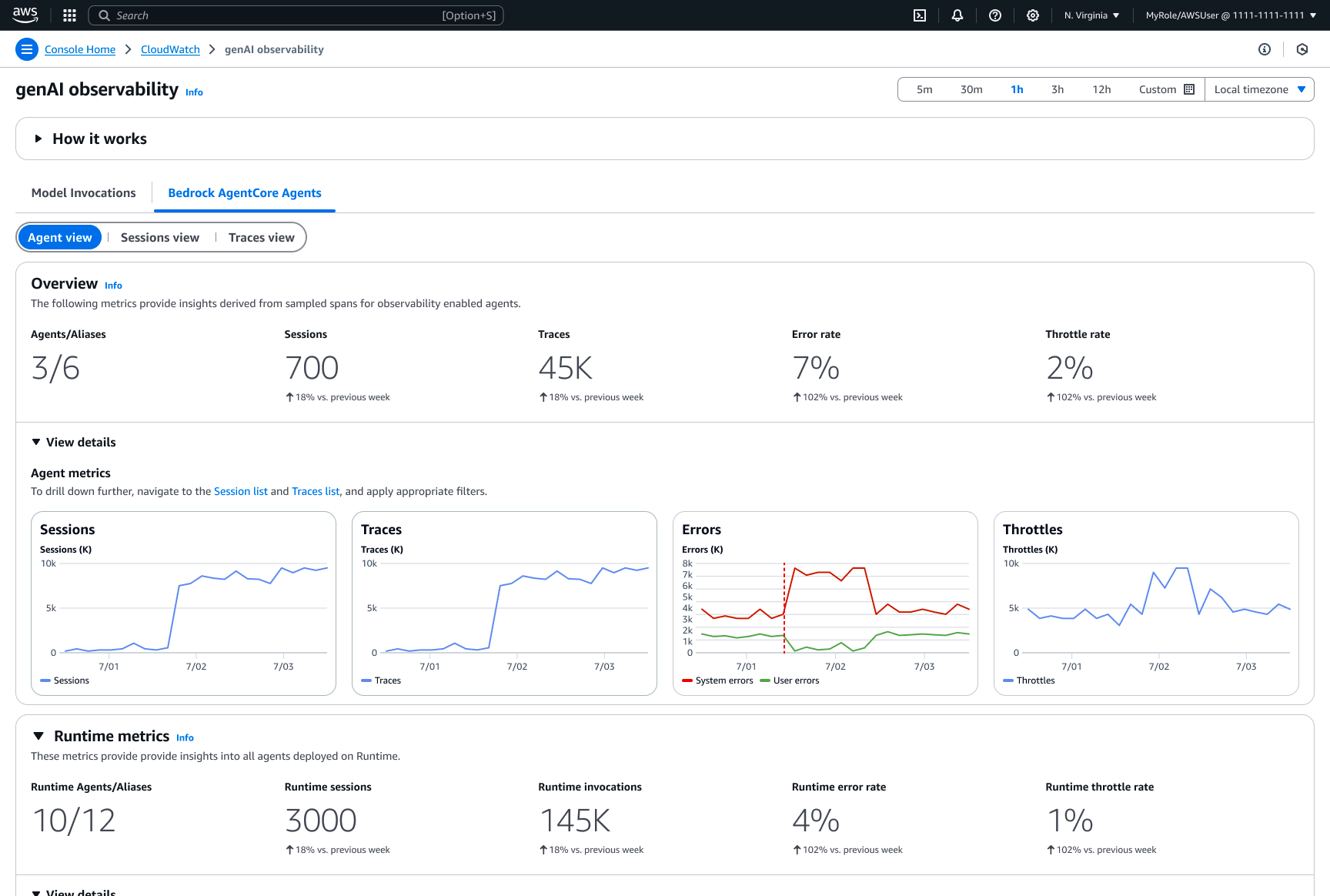

Observability

With any software product we support, we want insight into how well it’s performing. Observability has emerged as a critical practice for monitoring software systems, focusing on three pillars: logs, traces, and metrics.

All three components should be integrated into microagents. Logs capture metadata about requests exchanged between agents and end users. Traces track the execution pathway of each request, indicating what resources and tools were involved. Metrics provide insights into overall agent performance, covering how many requests it completed, how often it provided a successful answer, and how frequently it must respond, “Sorry, I do not know.” These metrics enable performance evaluations and, if necessary, an agent’s dreaded performance improvement plan.

Cost evaluation plays a role here too. If your bills are climbing, observability tools can help identify “spendy” agents. By inspecting token usage and other metrics, you can determine why costs are on the rise.
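
As a rough illustration of the metrics side, the sketch below counts requests, successful answers, “I do not know” replies, and tokens per agent, then picks out the most expensive one. The class and function names (`AgentMetrics`, `spendiest`) and the sample numbers are made up for this example; a real setup would more likely export such counters to an observability backend than keep them in memory.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class AgentMetrics:
    """In-memory counters for one agent (illustrative only)."""
    requests: int = 0
    answered: int = 0
    unknown: int = 0   # how often the agent said "Sorry, I do not know."
    tokens: int = 0

    def record(self, answered: bool, tokens_used: int) -> None:
        self.requests += 1
        self.tokens += tokens_used
        if answered:
            self.answered += 1
        else:
            self.unknown += 1

    def success_rate(self) -> float:
        return self.answered / self.requests if self.requests else 0.0


def spendiest(metrics_by_agent: Dict[str, AgentMetrics]) -> str:
    """Return the name of the agent that consumed the most tokens."""
    return max(metrics_by_agent, key=lambda name: metrics_by_agent[name].tokens)


fleet = {"reviewer": AgentMetrics(), "sales": AgentMetrics()}
fleet["reviewer"].record(answered=True, tokens_used=1200)
fleet["reviewer"].record(answered=False, tokens_used=800)
fleet["sales"].record(answered=True, tokens_used=300)

top_spender = spendiest(fleet)
```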

Communication Patterns

With microservices, we have various communication patterns and protocols to choose from, such as REST APIs, gRPC, and asynchronous messaging. For agents, the communication landscape is still evolving. OpenAI set an early convention with its API, which defines endpoints for managing conversation details. Other companies are following suit, often adopting similar APIs to ensure compatibility. Regardless, a microagent must expose a set of endpoints described by an industry-standard OpenAPI definition. (Just try saying “OpenAI API via OpenAPI” five times fast!)

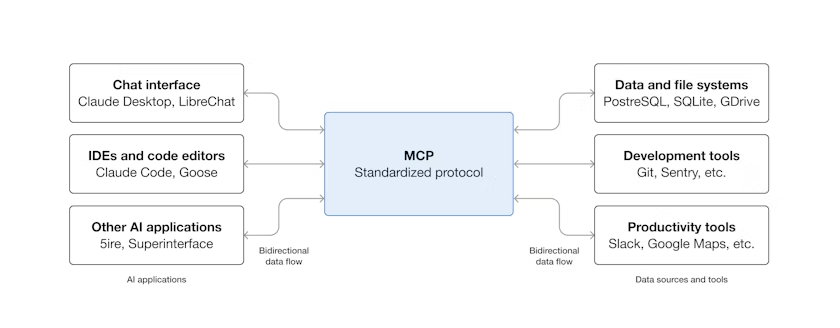

Though the OpenAI API isn’t a formal standard, other protocols are gaining traction for standardization. One such example is the Model Context Protocol (MCP), an open-source standard that enables AI applications to connect with external systems. Developed by Anthropic, the company behind the Claude models, MCP was swiftly adopted by major AI players, including OpenAI and Google DeepMind.

The official MCP website provides a clear and concise description of the protocol:

Using MCP, AI applications like Claude or ChatGPT can connect to data sources (e.g. local files, databases), tools (e.g. search engines, calculators) and workflows (e.g. specialized prompts)—enabling them to access key information and perform tasks. Think of MCP like a USB-C port for AI applications. Just as USB-C provides a standardized way to connect electronic devices, MCP provides a standardized way to connect AI applications to external systems.
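
Under the hood, MCP messages are JSON-RPC 2.0, and a tool invocation is a `tools/call` request carrying the tool name and its arguments. The sketch below builds such a request and answers it with a toy in-process dispatcher. Treat it as my reading of the message shape, not as a real MCP server or SDK: the `add` tool, the `handle` function, and the choice of error codes are assumptions for illustration, so check the specification before relying on any detail.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical local tools; a real MCP server would expose these over a transport.
TOOLS: Dict[str, Callable[..., str]] = {
    "add": lambda a, b: str(a + b),
}


def make_tool_call(request_id: int, name: str, arguments: Dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 request asking a server to run one tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def _error(request_id: Any, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle(raw_request: str) -> dict:
    """Toy dispatcher: run the named tool and wrap its output in a response."""
    request = json.loads(raw_request)
    if request.get("method") != "tools/call":
        return _error(request.get("id"), -32601, "Method not found")
    params = request["params"]
    tool = TOOLS.get(params["name"])
    if tool is None:
        return _error(request["id"], -32602, "Unknown tool")
    text = tool(**params["arguments"])
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"content": [{"type": "text", "text": text}]}}


response = handle(make_tool_call(1, "add", {"a": 2, "b": 3}))
```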

The MCP website also provides a fascinating array of use cases, such as:

- Agents can access your Google Calendar and Notion, acting as a more personalized AI assistant.

- Claude Code can generate an entire web app using a Figma design.

- Enterprise chatbots can connect to multiple databases across an organization, empowering users to analyze data using chat.

- AI models can create 3D designs on Blender and print them out using a 3D printer.

The community is buzzing with excitement, creating MCP wrappers around tools and services, paving the way for agents to incorporate them. A list can be explored here at awesome-mcp-servers.

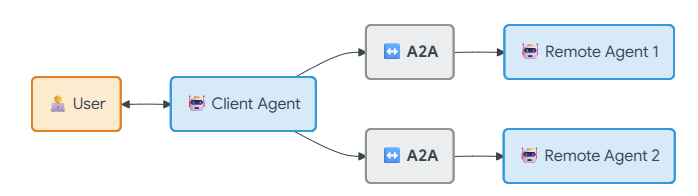

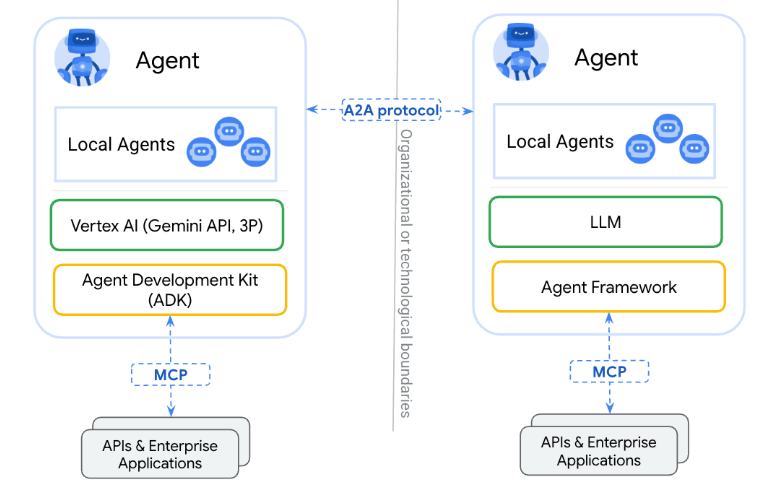

Another rising specification is the A2A Protocol, an open standard developed by Google and donated to the Linux Foundation to allow seamless communication between AI agents. In a world where agents are built across diverse frameworks, A2A creates a common language, breaking down silos and fostering interoperability.

Consider A2A as a set of patterns and APIs guiding agents on how to work together successfully - much like a team lead outlining clear collaboration practices. Though the A2A protocol is still in its infancy (announced on April 9, 2025), it shows promise, and I encourage you to familiarize yourself with its concepts as it continues to mature.

Testing

In product development, especially with custom software, testing becomes paramount to ensuring high quality. Traditional testing has established methodologies, encompassing automated, manual, security, performance, accessibility, and other testing types. Using the functional testing approach, we often verify predefined requirements.

For example, if we test a calculator to ensure 2 + 3 equals 5, it’s clear when the requirement is satisfied. However, free-form, non-deterministic answers introduce complexity. If you ask a chatbot, “Who is the current coach of the Kauno Žalgiris basketball club?” the answer is straightforward to verify (unless the season is a rocky one). But when posing open-ended questions like “Which basketball club is better - Kauno Žalgiris or Vilniaus Rytas?” the answer is subjective and influenced by many factors (and, yes, I know where my loyalty lies).

New challenges call for new solutions, leading to innovative practices like LLM-as-a-Judge. This evaluation technique employs large models to assess the quality, relevance, and reliability of outputs generated by other models, effectively mimicking human judgment at scale. This approach is invaluable for evaluating subjective tasks, where traditional metrics like ROUGE or BLEU often fall short in capturing deeper meanings. Just as we prefer avoiding manual testing, AI testing also aims for automation in verifying responses. While there are nuances when one AI tests another, it works surprisingly well, with tools like Ragas and DeepEval available for this purpose.
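
Here is a bare-bones sketch of the LLM-as-a-Judge idea: one model’s answer is wrapped in a grading prompt, a second model returns a score, and the test passes or fails against a threshold. It does not use Ragas or DeepEval; `JUDGE_PROMPT`, `judge`, and the 1 to 5 scale are my own assumptions, and `fake_judge_llm` is a stand-in so the example runs without any API access.

```python
from typing import Callable

JUDGE_PROMPT = (
    "You are a strict evaluator. Rate the ANSWER to the QUESTION from 1 (poor) "
    "to 5 (excellent) for relevance and balance. Reply with the number only.\n"
    "QUESTION: {question}\nANSWER: {answer}"
)


def judge(question: str, answer: str, llm: Callable[[str], str], threshold: int = 4) -> bool:
    """Ask a judge model for a score and compare it with the pass threshold."""
    prompt = JUDGE_PROMPT.format(question=question, answer=answer)
    reply = llm(prompt).strip()
    score = int(reply) if reply.isdigit() else 0  # unparseable verdicts count as failures
    return score >= threshold


def fake_judge_llm(prompt: str) -> str:
    """Stand-in for a real model call; rewards answers that acknowledge nuance."""
    return "5" if "depends" in prompt else "2"


question = "Which basketball club is better?"
balanced = judge(question, "It depends on the season and the roster.", fake_judge_llm)
one_sided = judge(question, "Mine, obviously.", fake_judge_llm)
```

In practice the judge would be a real (and ideally stronger) model, and the verdicts would feed the same automated test pipeline as any other assertion.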

Security

The security of the systems we build and utilize cannot be taken for granted. Significant thought and effort must go into ensuring that these systems can be used securely, and agentic AI is no exception. Just like any other engineering practice, we must not only implement traditional security measures but also devise new strategies to tackle the unique challenges posed by agentic AI.

Three risky capabilities are associated with agentic AI. Together they are commonly called the lethal trifecta, and the danger is greatest when a single agent combines all three:

- Access to Private Data: When agents are granted access to data, it brings benefits but also raises serious concerns about data classification, access authorization, compliance, and the natural fear that our data may be misused.

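- Exposure to Untrusted Content: Agents read content they do not control, such as web pages, emails, documents, and tool outputs. Any of it can carry hidden instructions (prompt injection) that the model may follow as if they came from the user. On top of that, foundational LLMs are typically trained on public data, which may not always be accurate, and AI systems are prone to hallucinations, meaning that results must always be verified and validated.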

- Ability to Communicate Externally: Tools empower agents, but they can also be misused for malicious purposes. Malicious tools can exploit agents to exfiltrate resources or carry out harmful actions using other tools.
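
One cheap safeguard is to declare these three capabilities per agent and flag any agent that holds all of them for extra review. The sketch below does just that; the `AgentCapabilities` fields and the two sample agents are hypothetical, and a flag like this is a starting point for a risk discussion, not a security control in itself.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class AgentCapabilities:
    """Declared capabilities of one agent (illustrative field names)."""
    name: str
    reads_private_data: bool
    sees_untrusted_content: bool
    communicates_externally: bool


def has_lethal_trifecta(agent: AgentCapabilities) -> bool:
    """True only when all three risky capabilities are combined in one agent."""
    return (agent.reads_private_data
            and agent.sees_untrusted_content
            and agent.communicates_externally)


def risky_agents(agents: List[AgentCapabilities]) -> List[str]:
    return [agent.name for agent in agents if has_lethal_trifecta(agent)]


agents = [
    AgentCapabilities("inbox-assistant", True, True, True),
    AgentCapabilities("design-reviewer", True, False, False),
]
flagged = risky_agents(agents)
```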

Given these considerations, we can develop an actionable risk management plan, create secure practices, and enforce them to prevent security vulnerabilities. Security practices are continually evolving, especially in relation to AI. Consequently, OWASP is working on the OWASP GenAI Security Project, which seeks to provide free, open-source guidance and resources to help understand and mitigate security and safety concerns related to generative AI applications. This project also includes the LLM Top 10, which explores the latest top 10 risks, vulnerabilities, and mitigations for developing and securing generative AI and large language model applications across the development, deployment, and management lifecycle.

Moreover, we can analyze the traditional OWASP Top 10 project through the lens of AI: for instance, injections can occur via prompts, MCP tools may suffer from broken access control, or our LLM APIs could be prone to security misconfigurations. Like any technology, there are inherent security risks, but it is our responsibility to address and mitigate these risks with appropriate practices.

Agentic Architecture

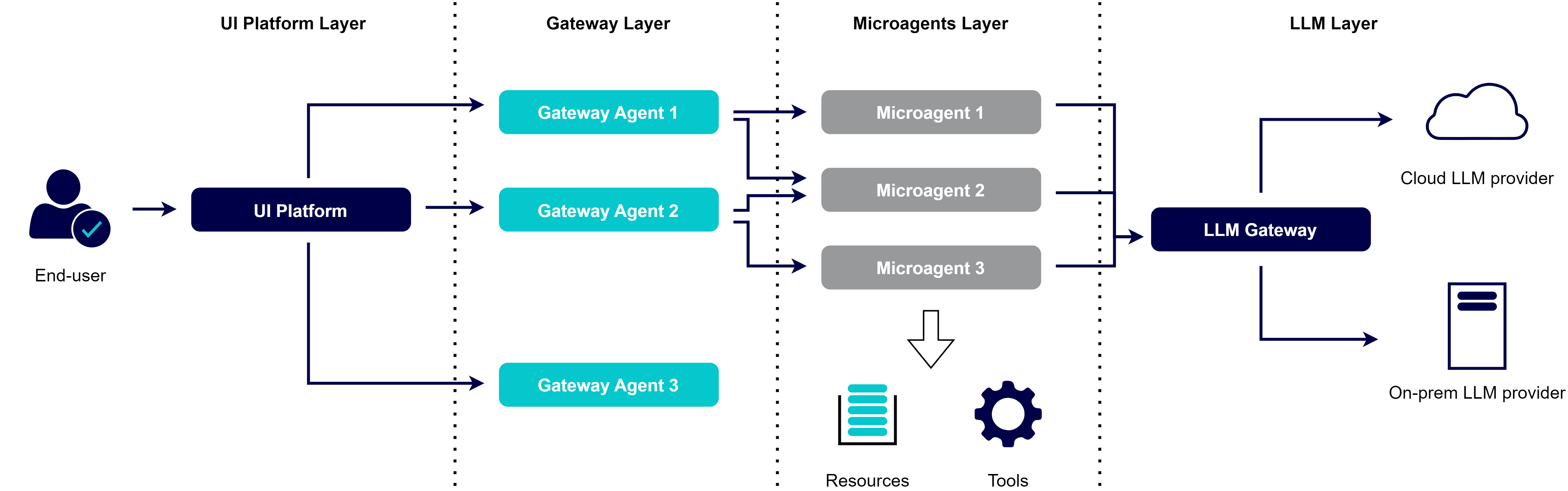

Designing an agentic architecture necessitates additional considerations and choices. Below is a reference diagram depicting an agentic platform equipped with user interface (UI) capabilities.

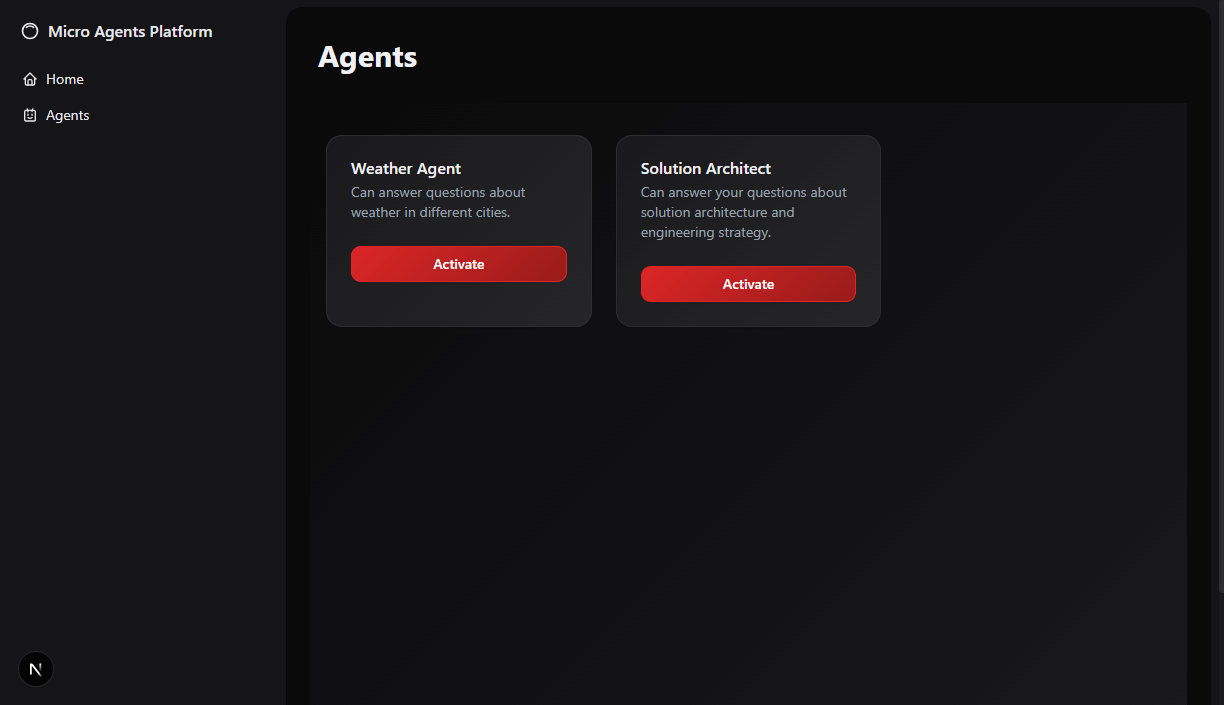

The UI Platform acts as the entry point, allowing end users to authenticate and view available agents based on their authorization. Some agents may function autonomously, with users only reviewing and approving their tasks as needed. Others could be user-centric assistants, helping users achieve their goals.

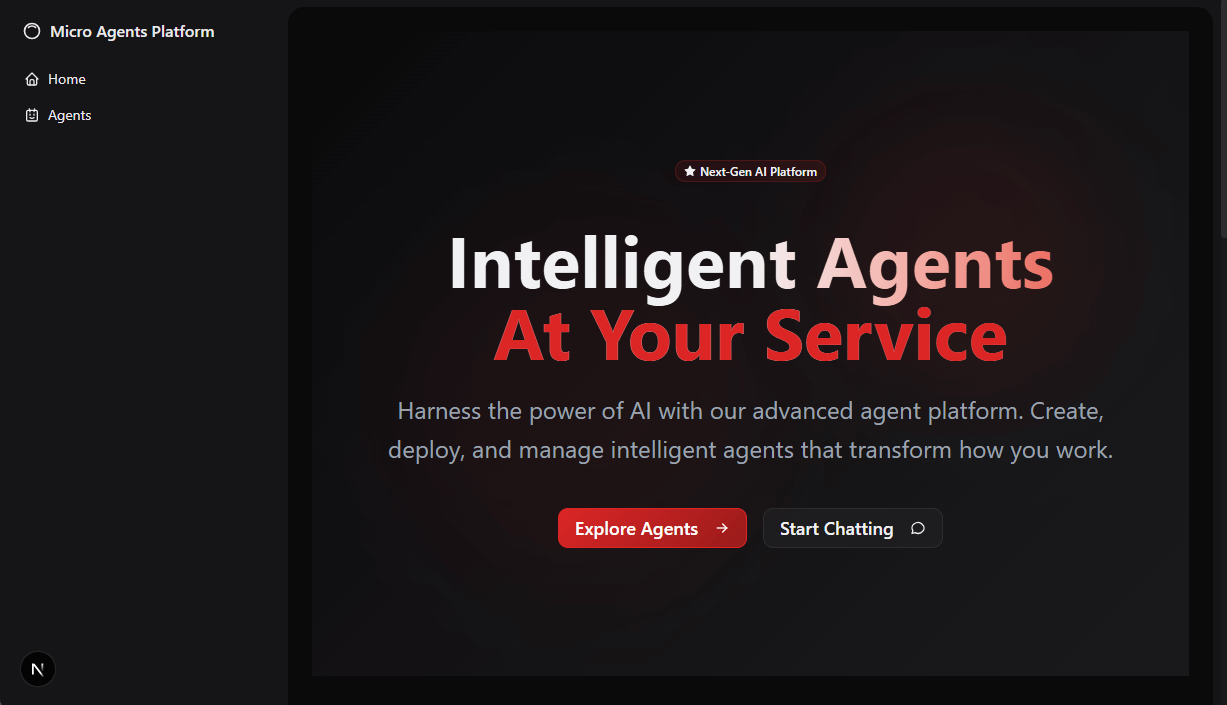

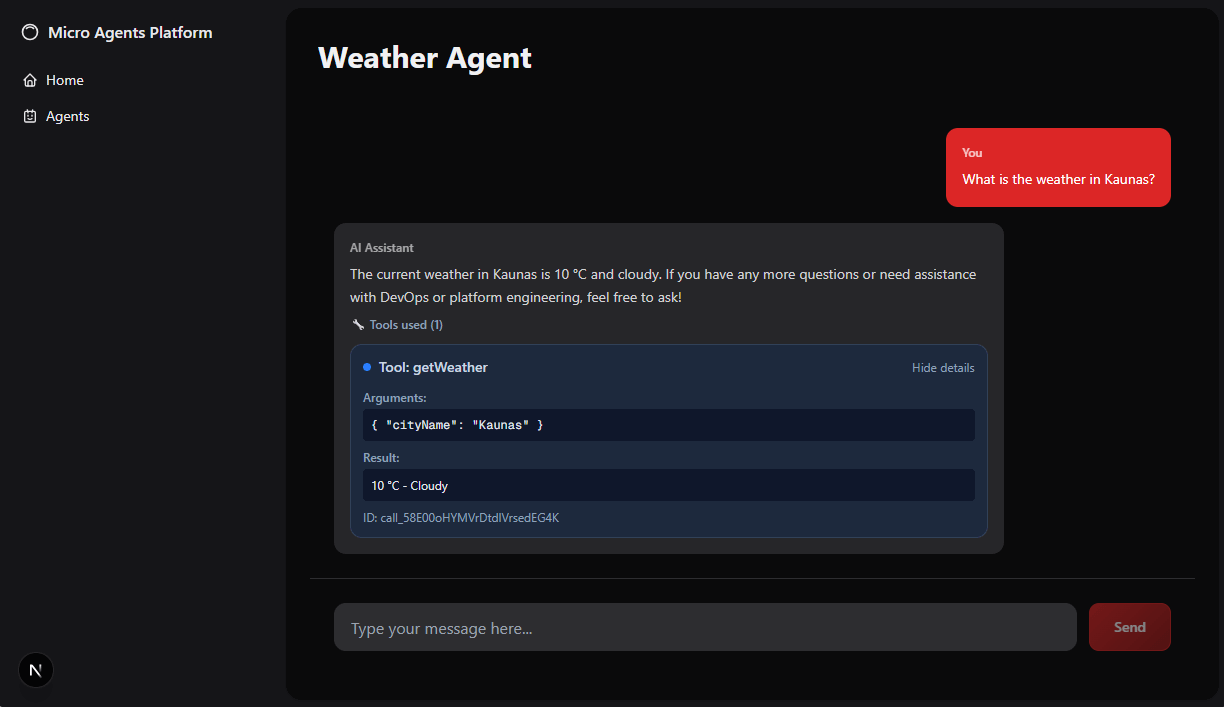

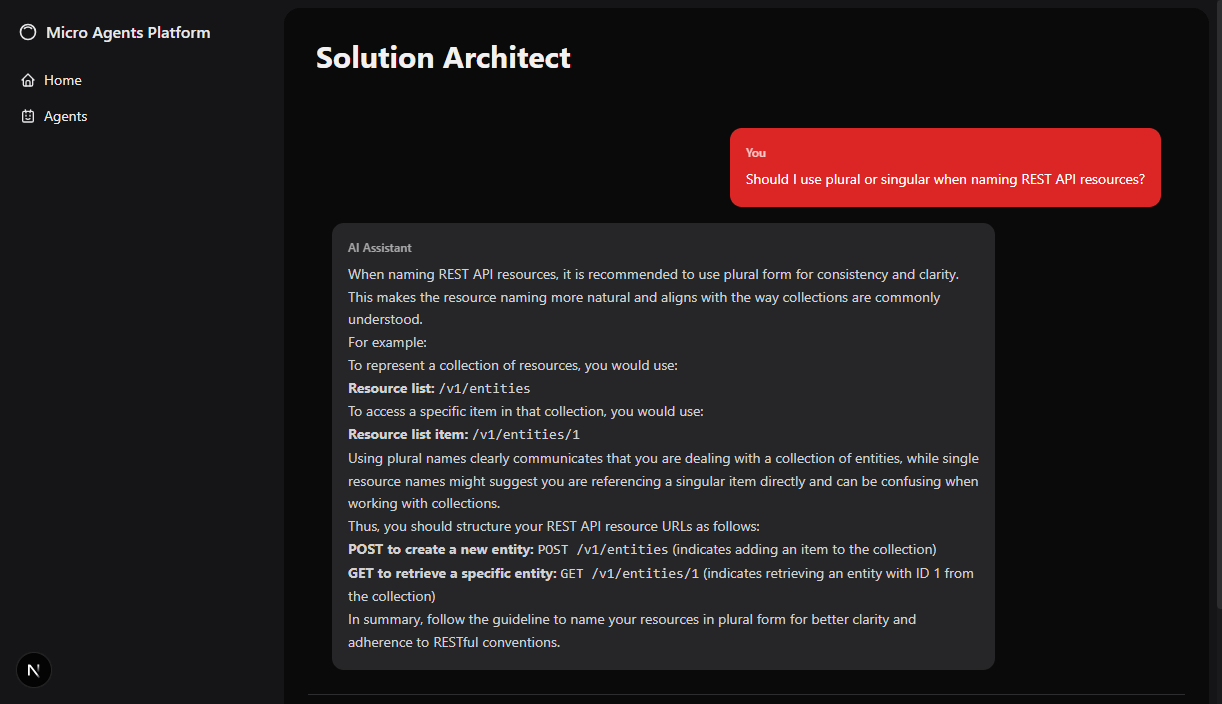

For inspiration, I’m sharing some screenshots of what the UI platform could look like based on my prototype:

Adjacent to this layer are gateway agents, encapsulating specific capabilities. From a functional perspective, these agents resemble managers, accepting requests, analyzing them, and delegating tasks within their team. In simpler scenarios, a gateway agent might function independently without the need for partner agents (e.g. Gateway Agent 3). Since Gateway Agents connect with the UI Platform, they require a communication interface that can facilitate interaction, such as REST APIs for conversation endpoints.

The UI Platform must also identify which agents exist within the ecosystem, demanding a discoverability function. The A2A specification includes the concept of an Agent Card, where each agent exposes an endpoint (https://{server_domain}/.well-known/agent-card.json) that delivers metadata, including name, description, and capabilities. Thus, by aggregating each agent’s greeting card, the UI Platform can perform automated discovery and registration for the entire collection of agents.
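
A discovery loop along these lines could look like the sketch below: build the well-known URL for each known domain, fetch the card, and register the agent under its name. The card here carries only the three fields mentioned above in a simplified shape (a real Agent Card has more structure), the example domains are invented, and `fake_fetch` replaces a real HTTP client so the snippet runs offline.

```python
import json
from typing import Callable, Dict, Iterable

WELL_KNOWN_PATH = "/.well-known/agent-card.json"


def agent_card_url(server_domain: str) -> str:
    return f"https://{server_domain}{WELL_KNOWN_PATH}"


def discover(domains: Iterable[str], fetch: Callable[[str], str]) -> Dict[str, dict]:
    """Fetch each agent card and build a name -> card registry."""
    registry: Dict[str, dict] = {}
    for domain in domains:
        try:
            card = json.loads(fetch(agent_card_url(domain)))
        except (OSError, ValueError):
            continue  # unreachable agent or malformed card: skip it
        if isinstance(card, dict) and "name" in card:
            registry[card["name"]] = card
    return registry


# Stand-in for the network: one reachable agent with a simplified card.
FAKE_WEB = {
    agent_card_url("reviewer.example.com"): json.dumps(
        {"name": "Design Reviewer", "description": "Reviews ADRs", "capabilities": ["review"]}
    ),
}


def fake_fetch(url: str) -> str:
    if url not in FAKE_WEB:
        raise OSError(f"cannot reach {url}")
    return FAKE_WEB[url]


registry = discover(["reviewer.example.com", "offline.example.com"], fake_fetch)
```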

Gateway Agents communicate with Microagents via the A2A protocol. Serving as orchestrators, gateway agents delegate tasks to the appropriate microagents, collect partial responses, track overall task completion, and return final results to users through the UI platform. For internal communication, agents can use various transport mechanisms: JSON-RPC, gRPC, or simply JSON over HTTP. Because internal calls are frequent, the choice of transport mechanism significantly affects the performance of the agentic system.
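
The orchestration loop itself can be sketched in a few lines. In this simplified version the microagents are plain Python callables and the plan is handed in ready-made; in a real system the calls would travel over A2A and the plan would probably come from an LLM. `GatewayAgent`, the skill names, and the result format are all assumptions for illustration.

```python
from typing import Callable, Dict, List, Tuple

Microagent = Callable[[str], str]


class GatewayAgent:
    """Toy orchestrator: delegates subtasks and tracks overall completion."""

    def __init__(self, microagents: Dict[str, Microagent]) -> None:
        self.microagents = microagents

    def handle(self, plan: List[Tuple[str, str]]) -> dict:
        """Run a plan given as (skill, subtask) pairs and merge the partial results."""
        partials: List[str] = []
        unhandled: List[str] = []
        for skill, subtask in plan:
            agent = self.microagents.get(skill)
            if agent is None:
                unhandled.append(skill)  # no microagent offers this skill
                continue
            partials.append(agent(subtask))
        return {
            "completed": not unhandled,
            "result": " ".join(partials),
            "unhandled": unhandled,
        }


gateway = GatewayAgent({
    "summarize": lambda text: f"Summary of {text}.",
    "translate": lambda text: f"Translation of {text}.",
})
outcome = gateway.handle([("summarize", "the ADR"), ("translate", "the summary")])
```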

Microagents utilize MCP to retrieve resources and activate tools. Each microagent may possess different tools, just as a carpenter keeps different tools in their toolbox for specific tasks - the right tool for the right job.

Finally, we have the LLM Layer, which includes an LLM Gateway and LLM providers. The LLM Gateway is a vital component when constructing LLM-as-a-service capabilities, serving as a single access point for various LLMs. This not only improves security but offers other benefits as well. A centralized LLM catalog helps ensure that appropriate guardrails and policies are in place. Additionally, a consolidated LLM Gateway facilitates cost tracking and evaluation to ensure agents don’t exceed their budgets. Moreover, the LLM Gateway can abstract provider details away from agents, whether they rely on cloud services (e.g., Azure AI Foundry) or on-premises resources (e.g., llama.cpp running on local servers). This versatility allows the use of varied cloud providers alongside on-premises solutions without impacting the agents, provided the gateway interface remains intact.
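
To show how a gateway can combine provider abstraction with budget enforcement, here is a small sketch. Providers are plain callables keyed by a model name, budgets are token counts per agent, and tokens are approximated by counting words. All of this is assumed for the example: `LLMGateway`, `BudgetExceeded`, the model names, and the word-count heuristic are not taken from any real gateway product.

```python
from typing import Callable, Dict

Provider = Callable[[str], str]


class BudgetExceeded(Exception):
    """Raised when an agent has no token budget left for a request."""


def count_tokens(text: str) -> int:
    return len(text.split())  # crude proxy; real gateways use the provider's tokenizer


class LLMGateway:
    def __init__(self, providers: Dict[str, Provider], budgets: Dict[str, int]) -> None:
        self.providers = providers      # model name -> callable (cloud or on-premises)
        self.budgets = budgets          # agent name -> token budget
        self.used: Dict[str, int] = {}  # agent name -> tokens consumed so far

    def complete(self, agent: str, model: str, prompt: str) -> str:
        spent = self.used.get(agent, 0)
        prompt_tokens = count_tokens(prompt)
        if spent + prompt_tokens > self.budgets.get(agent, 0):
            raise BudgetExceeded(f"{agent} is out of budget")
        reply = self.providers[model](prompt)
        self.used[agent] = spent + prompt_tokens + count_tokens(reply)
        return reply


gateway = LLMGateway(
    providers={"cloud-model": lambda prompt: "ok", "local-model": lambda prompt: "ok"},
    budgets={"reviewer": 10},
)
answer = gateway.complete("reviewer", "local-model", "hello world")
```

Because agents only ever see `complete`, swapping `local-model` for `cloud-model` is a configuration change in the gateway, not a code change in the agent.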

Use Cases

With a solid understanding of how to construct an agent under our belts, it’s time to explore some example use cases. The sky’s the limit, so let your imagination run wild about the agents you’d want at your disposal.

Note: These examples are simplified and should not be directly implemented in production.

Technical Design Reviewer

Prompt:

You are an experienced software architect. Review this architecture decision record to evaluate if it provides enough context, compares possible options, and defines a reasoned decision. Focus on scalability, reliability, security, compliance, and standardization. Propose suggestions on how to improve this architecture decision record.

Resources needed:

- Example Architecture Decision Record (ADR) for template and structure

- Access to engineering strategy

- Access to other existing architecture decision records

User Experience Researcher

Prompt:

You are an experienced user experience researcher. Analyze the provided workflow against System X. Evaluate if the flow is intuitive, informative, and clear for users. Provide suggestions on how to enhance the flow and user interactions.

Resources needed:

- Flow specification

- Credentials to the testing account and environment

Tools needed:

- Web browser

Sales Intern

Prompt:

You are an IT consultant preparing a proposal for a potential client. Summarize our company’s capabilities and offerings. Use a professional and pleasant tone. Be concise and clear. Employ a standardized slide template.

Resources needed:

- Documents detailing company capabilities

- Documents about company service offerings

Tools needed:

- PowerPoint

Available Platforms

As agentic AI rides the wave of current hype, countless companies are developing services and capabilities aimed at providing agentic AI to clients.

- AWS offers Amazon Bedrock Agents;

- Azure is making significant strides in AI, building new capabilities within the AI Foundry platform and introducing the Microsoft Agent Framework;

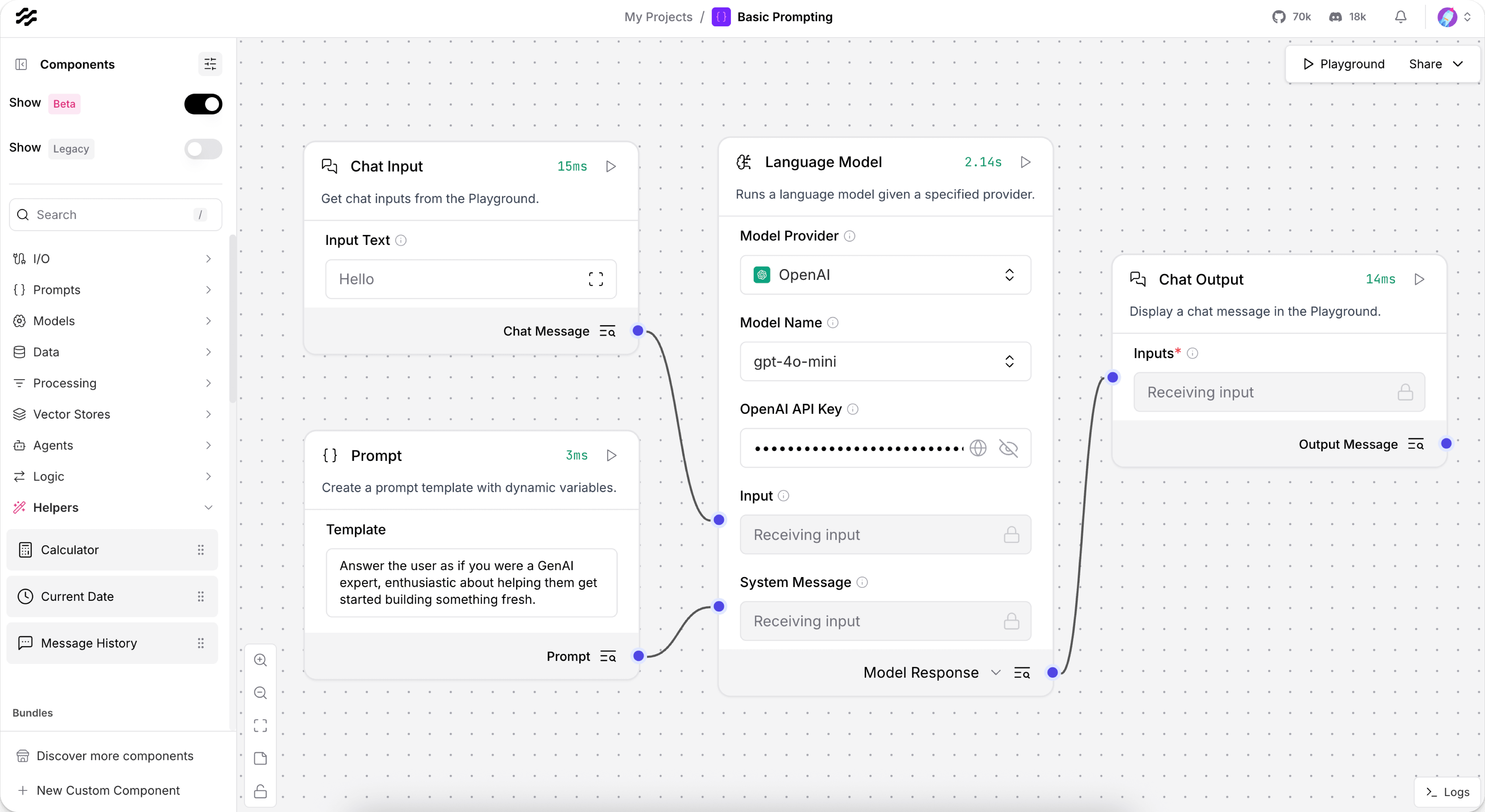

- Langflow is a low-code open-source framework for creating AI agents through a user interface or programmatic methods;

- GCP presents its Vertex AI Agent Builder;

- …and hundreds or thousands of other AI products sprouting from innovative startups.

Choosing the right AI tooling can feel like navigating a crowded marketplace, with new options sprouting up while others fade away. However, the cloud giants present a favorable balance of stability and capability, making them a solid starting point for building AI platforms.

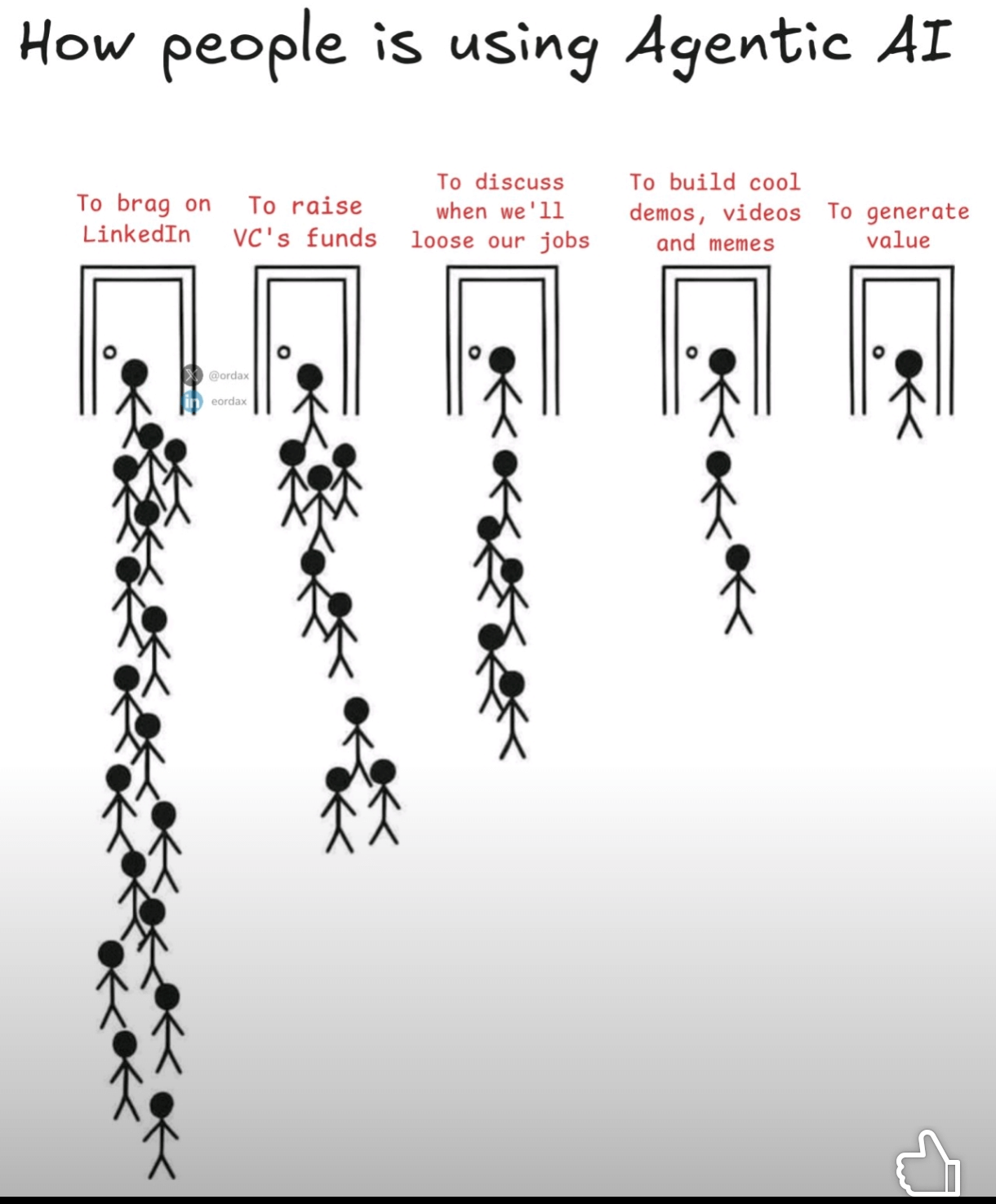

Battling the Hype

Understanding the mechanics of agentic AI and its development can help us cut through the hype surrounding it. Here are a few pointers to keep in mind:

- Fear of Missing Out: This sentiment is rampant among leaders, who worry that competitors might be making great strides with AI while they’re left in the dust. That concern is valid, but, as with any significant decision, emotional responses don’t make for sound strategy. By remaining strategic and thoughtful, we can craft the best approach to achieve our objectives.

- Fundamentals Over Latest Tooling: AI engineering is just beginning its journey. Hence, it’s crucial to grasp and apply core fundamentals before diving into the latest tools. Without a solid strategy, tactical decisions can spiral into chaotic instability.

- Improve What Matters: AI is just that - a tool. The main objectives of companies shouldn’t be “Use AI for everything.” Optimal company goals revolve around efficiency, cost management, and revenue generation. AI can play a role in achieving these objectives but is not the objective itself.

- Start Small and Iterate: Jumping into large-scale changes rarely ends well. Significant shifts and investments can be risky, creating stress for both leadership and teams. Instead, embrace smart investments, initiate small projects, assess impacts, make informed decisions, and refine your strategy over time. Regular iteration leads to better results.

- Set Expectations: Align with leadership on realistic goals. If goals seem unattainable or lack actionable outcomes, be candid about it and work collaboratively to improve them. Addressing these concerns early avoids accountability dilemmas later on.

Conclusion

I hope this post has been both enlightening and entertaining, offering fresh insights into agentic AI while demystifying some of the surrounding fog. The hype surrounding advancements in AI is inevitable, fueled by excitement over new capabilities and investor enthusiasm. However, when it comes to practical, achievable solutions, we must consider all relevant factors. Agentic AI is poised to transform our lives moving forward, so leverage this knowledge to embark on your journey in building agentic AI - beyond the hype!